Now where is the shovel head maker, TSMC?

And then China popping their head out claiming Taiwan is part of China because they want to seize TSMC

Eh, they'll have plenty of demand for their nodes regardless. Non-AI CPUs and GPUs are still going to want them.

meanwhile i just want cheap gpus for my bideogames again

You can buy them new for somewhat reasonable prices. What people should really look at is used 1080 Tis on eBay. They're going for less than $150 and still play plenty of games perfectly fine. It's the budget PC gaming deal of the century.

It's probably the best performance per dollar you can get, but a lot of modern games are unplayable on it.

Lot of those games are also hot garbage. Baldur's Gate 3 may be the only standout title of late where you don't have to qualify what you like about it.

I think the recent layoffs in the industry also portend things hitting a wall; games aren't going to push limits as much as they used to. Combine that with Steam Deck-likes becoming popular: those could easily become the new baseline performance that games target. If so, a 1080 Ti could be a very good card for a long time to come.

You're misunderstanding the issue. As much as "RTX OFF, RTX ON" is a meme, the RTX series of cards genuinely introduced improvements to rendering techniques that were previously impossible to pull off with acceptable performance, and more and more games are making use of them.

Alan Wake 2 is a great example of this. The game runs like ass on 1080 Tis, even on low, because the 1080 Ti is physically incapable of performing the kind of rendering instructions they're using without a massive performance hit. Meanwhile, the RTX 2000 series cards are perfectly capable of doing it. Digital Foundry's Alan Wake 2 review goes a bit more in depth about it; it's worth a watch.

If you aren't going to play anything that came out after 2023, you're probably going to be fine with a 1080 Ti, because it was a great card, but we're definitely hitting the point where technology is moving to different rendering standards that it doesn't handle as well.

So here are two links about Alan Wake 2.

First, on a 1080 Ti: https://youtu.be/IShSQQxjoNk?si=E2NRiIxz54VAHStn

And then on a ROG Ally (which I picked because it's a little more powerful than the current Steam Deck and runs Windows natively): https://youtu.be/hMV4b605c2o?si=1ijy_RDUMKwXKQQH

The ROG Ally seems to be doing a little better, but not by much. They're both hitting sub-30 fps at 720p.

My point is that if that kind of handheld hardware becomes typical, combined with the economic problems of continuing to make highly detailed games, then Alan Wake 2 is going to be an aberration. The industry could easily pull back on that, and I welcome it. The push for higher and higher detail has not resulted in good games.

Meanwhile, I don't think I've played more than 30 minutes on my PS5 this year, and it's June. I have definitely not played any minutes on the 1080 sitting in my PC...

Oh fuck, scratch that, I may have played about 2 hours of Dune: Spice Wars.

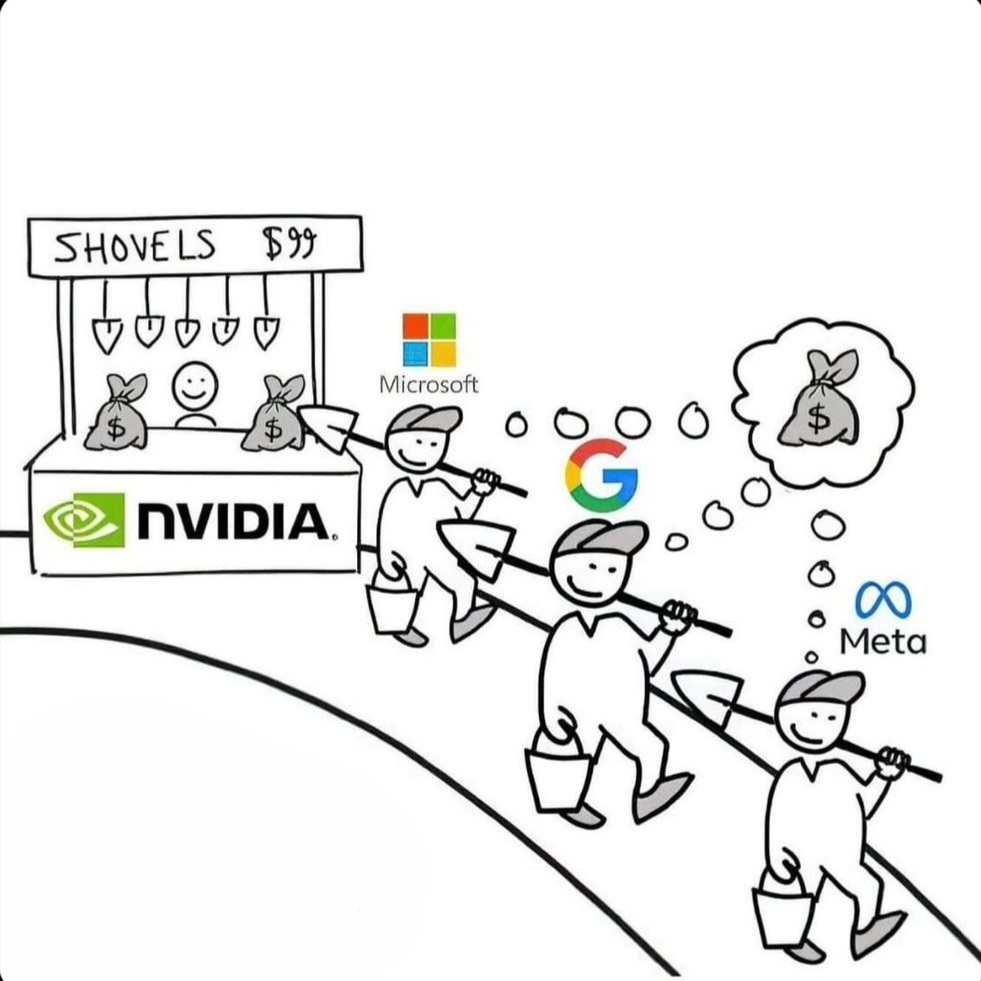

Nvidia's being pretty smart here, ngl.

This is the ai gold rush and they sell the tools.

Yes that's the meme.

Edited the price to something more nvidiaish:

Gotta add a few more 9s to that. These are enterprise cards we're talking about.

Literally about to do the same.

Jensen also is obsessed with how much stuff weighs. So maybe he'd sell shovels by the ton.

Don't forget AMD, good potential if they bring out similar technology to compete with NVIDIA. Less so Intel, but they're in the GPU market too.

Does ARM do anything special with AI? Or is that just the actual chip manufacturers designing that themselves?

As I understand it, ARM chips are much more efficient on the same tasks, so they're cheaper to run.

They will eat massive shit when that AI bubble bursts.

I mean, if LLM/diffusion-type AI is a dead end and the extra investment happening now doesn't lead anywhere beyond that, then yes, the bubble will likely burst.

But, this kind of investment could create something else. We'll see. I'm 50/50 on the potential of it myself. I think it's more likely a lot of loud talking con artists will soak up all the investment and deliver nothing.

Bubbles have nothing to do with technology; the tech is just a tool to build the hype. The bubble will burst regardless of the success of the tech. At most, success will slightly delay the burst, because what is bursting isn't the tech, it's the financial structures around it.

It's looking like a dead end. The content that can be fed into the big LLMs has already been done. New stuff is a combination of actual humans and stuff generated by LLMs. It then runs into an ouroboros problem where it just eats its own input.

I mostly agree, with the caveat that 99% of AI usage today is just stupid gimmicks and very few people or companies are actually using what LLMs offer effectively.

It kind of feels like when schools got sold those Smart Whiteboards that were supposed to revolutionize teaching in the classroom, only to realize the issue wasn't the tech, but the fact that the teachers all refused to learn and adapt and let the things gather dust.

I think modern LLMs should be used almost exclusively as an assistive tool to help empower a human worker further, but everyone seems to want an AI that you can just tell 'do the thing' and have it spit out a finalized output. We are very far from that stage in my opinion, and as you stated LLM tech is unlikely to get us there without some sort of major paradigm shift.

> only to realize the issue wasn’t the tech

To be fair, electronic whiteboards are some of the jankiest piles of trash I've ever had to use. I swear to God you need to re-calibrate them every 5 minutes.

I doubt it. Regardless of the current stage of machine learning, everyone is now tuned in and pushing the tech. Even if LLMs turn out to be mostly a dead end, everyone investing in ML means that the ability to do LOTS of floating point math very quickly without the heaviness of CPU operations isn’t going away any time soon. Which means nVidia is sitting pretty.

The WWW wasn't a dead end, but the bubble burst anyway. The same will happen to AI, because exponential growth can't be sustained forever.

See Sun Microsystems after the .com bubble burst. They produced a lot of the servers that .com companies were using at the time. Shriveled up after and were eventually absorbed by Oracle.

Why did Oracle survive the same period? Because they latched onto a traditional Fortune 500 market and have never let go, to this day.

Worst one is probably Apple. They just announced "Apple Intelligence", which is just ChatGPT, from OpenAI, whose largest shareholder is Microsoft. Figure that one out.

Well, most of the requests are handled on device with their own models. If it’s going to ChatGPT for something it will ask for permission and then use ChatGPT.

So the Apple Intelligence isn’t all ChatGPT. I think this deserves to be mentioned as a lot of the processing will be on device.

Also, I believe part of the deal is that ChatGPT can't save anything, and Apple is anonymising the requests too.

If you think that’s the WORST ONE, you have no idea about any of this

Yeah, if anything, Apple is behind the curve. Nvidia/AMD/Intel have gone full cocaine nose dive into AI already.

Not true. Most if not all requests are handled by Apple's own models, on device or on their own servers. When it does use OpenAI, you need to give it permission each time.

That's just not true. Most requests are handled on-device. If the system decides a request should go to ChatGPT, the user is prompted to agree and no data is stored on OpenAI's servers. Plus, all of this is opt-in.

Admittedly, I bought an Nvidia card for AI. I am part of the problem.

I don't think it's a problem, more like a situation. You are not doing anything wrong or stupid, just interested in something new and promising and have the resources to pursue it. Good for you, may you find gold.

Serious Question:

Why is Nvidia the AI king while I see nothing from AMD for AI?

I'm an AI Developer.

TLDR: CUDA.

Getting ROCm to work properly is like herding cats.

You need a custom implementation for the specific operating system, the driver version must be locked and compatible (especially with a Workstation / WRX card, where the Pro drivers are especially prone to breaking), you need the specific dependencies to be compiled for your variant of hipBLAS, or ZLUDA, and if that doesn't work, you need ONNX transition graphs, but then you find out PyTorch doesn't support ONNX unless it's 1.2.0, which breaks another dependency of X-Transformers, which then breaks because the version of hipBLAS is incompatible with that older version of Python and..

Inhales

And THEN MAYBE it'll work at 85% of the speed of CUDA, if it doesn't crash first due to an arbitrary error such as CUDA_UNIMPEMENTED_FUNCTION_HALF.

You get the picture. On Nvidia, it's click, open, CUDA working? Yes? Done. You don't spend 120 hours fucking around and recompiling for your specific use case.

Also, you need a supported card. I have a potato going by the name RX 5500, not on the supported list. I have the choice between three ROCm versions:

- An age-old prebuilt. Generally works, but occasionally crashes the graphics driver, unrecoverably so: Linux tries to re-initialise everything but that fails, and it needs a proper reset. I do need to tell it to pretend I have a different card.

- A custom-built one, which I fished out of a docker image I found on the net because I can't be arsed to build that behemoth. It's dog-slow, due to using all generic code and no specialised kernels.

- Any newer prebuilt. Works fine for some (or should I say, very few) workloads, mostly just BLAS stuff; otherwise it simply hangs. Presumably because they updated the kernels and now they're using instructions that my card doesn't have.

#1 is what I'm actually using. I can deal with a random crash every other day to every other week or so.
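The "pretend I have a different card" step isn't spelled out above. A common way to do it, and the one assumed here, is ROCm's `HSA_OVERRIDE_GFX_VERSION` environment variable, which makes the runtime treat the GPU as a different gfx target. A minimal sketch, assuming the variable must be set before any ROCm-backed library (such as PyTorch) is imported; the value `10.3.0` (gfx1030, an RDNA2 target) is an often-cited choice for unsupported RDNA cards, not something confirmed for the RX 5500, and `spoof_gfx_version` is a hypothetical helper:

```python
import os

# Assumption: the ROCm runtime reads HSA_OVERRIDE_GFX_VERSION when it
# initialises, so it must be set before torch (or any other ROCm-backed
# library) is imported in this process.
# "10.3.0" asks ROCm to treat the GPU as gfx1030 (RDNA2). This value is
# illustrative only; whether it works on a given card is not guaranteed.
SPOOFED_GFX_VERSION = "10.3.0"


def spoof_gfx_version(version: str = SPOOFED_GFX_VERSION) -> str:
    """Set the override for this process unless the user already set one.

    Returns the value that is now in effect.
    """
    os.environ.setdefault("HSA_OVERRIDE_GFX_VERSION", version)
    return os.environ["HSA_OVERRIDE_GFX_VERSION"]


if __name__ == "__main__":
    print("HSA_OVERRIDE_GFX_VERSION =", spoof_gfx_version())
    # Only after this point would you import the ROCm-backed library,
    # e.g. `import torch`.
```

Using `setdefault` means an override exported in the shell (for example `HSA_OVERRIDE_GFX_VERSION=10.3.0 python train.py`) wins over the hard-coded value. A spoofed target can still pick kernels that use instructions the real card lacks, which would be consistent with the crashes described above.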

It really would not take much work for them to have a fourth version: one that's not "supported-supported" but "we're making sure this thing runs". Current ROCm code, using kernels you write for other cards if they happen to work, and generic code otherwise.

Seriously, ROCm is making me consider Intel cards. Price/performance is decent, plenty of VRAM (at least for its class), and apparently their API support is actually great. I don't need CUDA or ROCm, after all; what I need is PyTorch.
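The "what I need is PyTorch" point is that model code targets PyTorch's device abstraction rather than a vendor API. A minimal sketch of vendor-agnostic device selection, assuming a ROCm build of PyTorch reports AMD GPUs through the `torch.cuda` API and a recent build exposes Intel GPUs as `torch.xpu`; `pick_device` is a hypothetical helper, not part of PyTorch:

```python
import importlib.util


def pick_device() -> str:
    """Pick a PyTorch device string without caring whose GPU it is.

    Assumptions: ROCm builds of PyTorch expose AMD GPUs through the
    torch.cuda API (so they also report "cuda"), and Intel GPUs show up
    under torch.xpu on releases that ship it. Falls back to "cpu",
    including when PyTorch is not installed at all.
    """
    if importlib.util.find_spec("torch") is None:
        return "cpu"
    import torch

    if torch.cuda.is_available():
        # Nvidia (CUDA build) or AMD (ROCm build, where torch.version.hip is set).
        return "cuda"
    xpu = getattr(torch, "xpu", None)  # absent on older PyTorch releases
    if xpu is not None and xpu.is_available():
        return "xpu"
    return "cpu"


if __name__ == "__main__":
    device = pick_device()
    print("running on", device)
    # The model code itself stays the same on every vendor, e.g.
    # model.to(device); x = torch.randn(8, 8, device=device)
```

The design choice is that only this one function knows about vendors; everything downstream just passes `device` around. Getting `torch.cuda.is_available()` to return `True` on an unsupported AMD card is exactly the part the comments above describe as painful.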

Simple Answer:

CUDA

So, AMD has started slapping the AI branding onto some of their products, but they haven’t leaned into it quite as hard as Nvidia has. They’re still focusing on their core product lineup and developing the actual advancements in chip design.