@AutoTLDR

TIL. Thank you! (Now I will ssh into all my VPSes and set this up!)

(cool username btw)

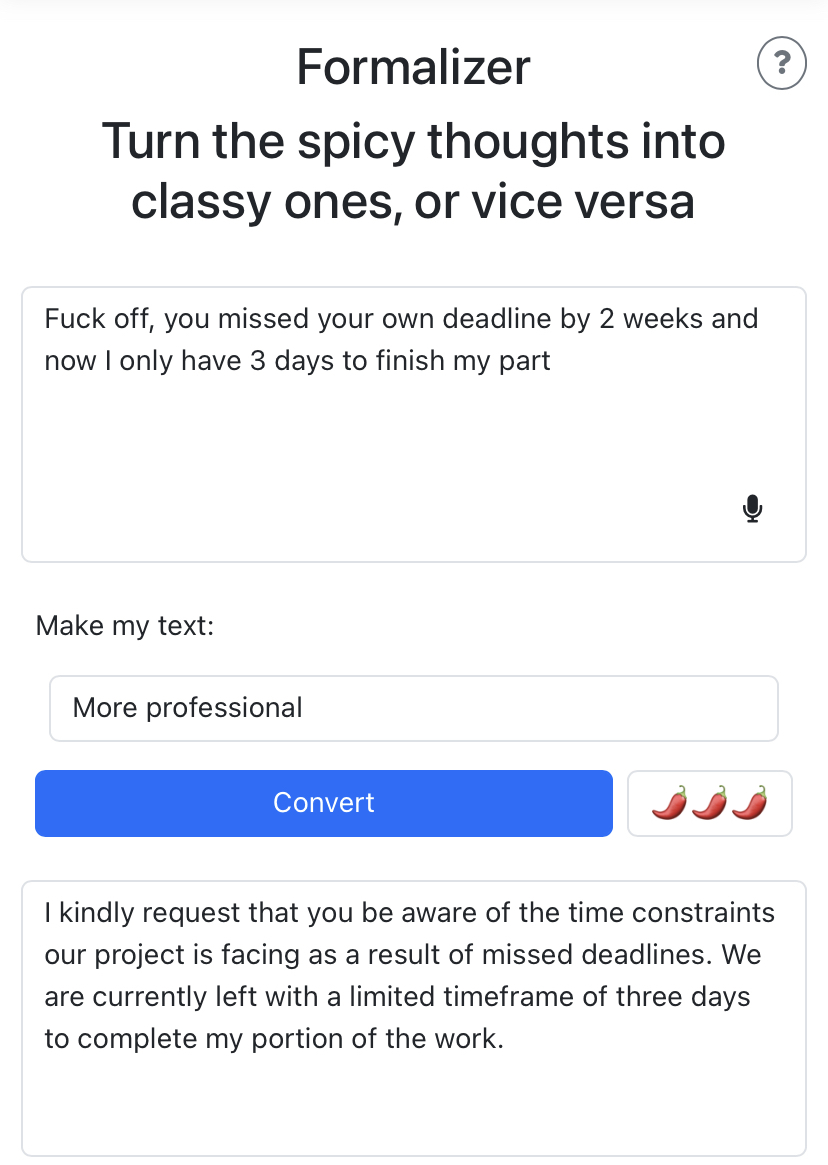

My favorite, the formalizer:

This describes 99% of AI startups.

The company I work for was considering using Mendable for AI-powered documentation search. In a day, I built a prototype using OpenAI embeddings and GPT-3.5 that was just as good as their product. They didn’t buy Mendable :)
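For anyone curious what a prototype like that involves, here is a rough sketch of the retrieval step. You embed the documentation chunks and the question, pick the chunk most similar to the question by cosine similarity, and paste it into a prompt for the chat model. To keep it offline and runnable, `embed` is a hypothetical stand-in that uses word counts instead of calling OpenAI's embedding API. `DOCS`, `search`, and `build_prompt` are made-up names, no model is actually called, and this is a generic illustration, not the actual prototype.

```python
import math
from collections import Counter

# Hypothetical documentation chunks; a real prototype would split these from the actual docs.
DOCS = [
    "To install the CLI, run the installer and add it to your PATH.",
    "Authentication uses API keys passed in the Authorization header.",
    "Rate limits reset every minute; retry after the time in Retry-After.",
]


def embed(text: str) -> Counter:
    """Stand-in embedding (assumption): lowercase word counts.

    A real prototype would call an embedding model here and get back a dense
    float vector; word counts keep this sketch self-contained.
    """
    words = "".join(c if c.isalnum() else " " for c in text.lower()).split()
    return Counter(words)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documentation chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Prompt that would be sent to a chat model such as GPT-3.5."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this documentation:\n{joined}\n\nQuestion: {query}"


question = "How do I pass my API key?"
print(build_prompt(question, search(question, DOCS)))
```

With real embeddings the similarity also catches paraphrases (e.g. "key" vs "keys"), which word counts cannot; that is most of what the embedding model buys you.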

They’re complaining that if there is a single word in an entire file that Copilot considers “bad”, it will not work at all in that file.

This is an excellent explanation of hashing, and the interactive animations make it very enjoyable and easy to follow.

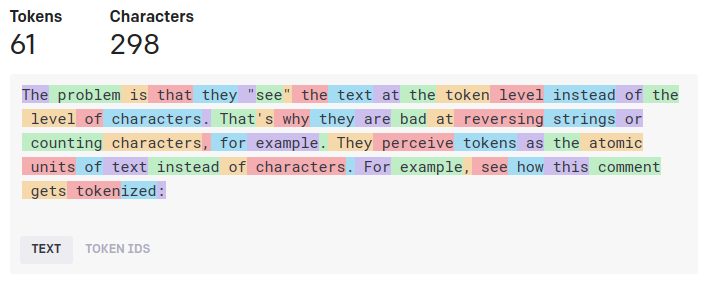

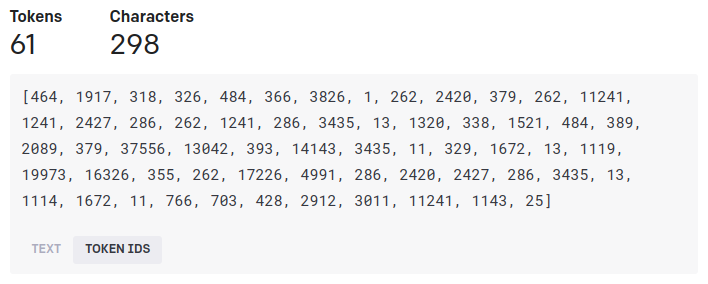

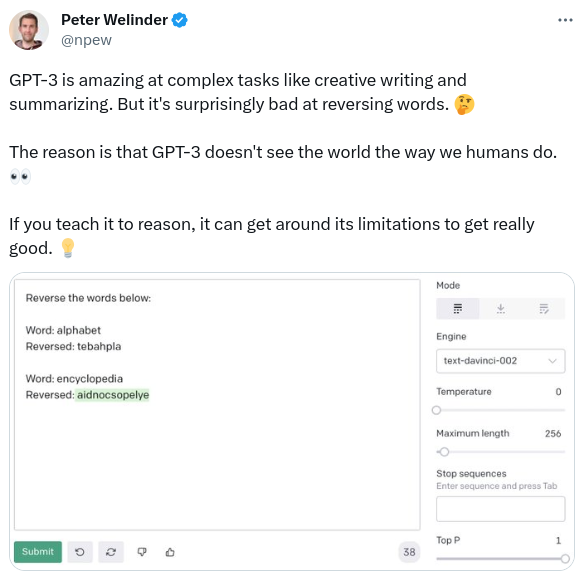

The problem is that they "see" text at the token level rather than at the character level. That's why they are bad at tasks like reversing strings or counting characters: they perceive tokens, not characters, as the atomic units of text. For example, see how this comment gets tokenized:

With the token IDs shown:

Current versions of ChatGPT have gotten pretty good at these tasks, but they are still hard for them.
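To make the token-level point concrete, here is a toy sketch. It assumes a tiny hypothetical vocabulary with made-up token IDs and a greedy longest-match splitter. Real tokenizers, such as the byte-pair encodings used by GPT models, learn their vocabularies from data and produce different splits and IDs, so treat this as an illustration of the idea, not of how any specific model tokenizes.

```python
# Hypothetical toy vocabulary: two common multi-character chunks plus
# single-character fallbacks, mapped to made-up token IDs.
VOCAB = {
    "straw": 100, "berry": 101,
    "a": 0, "b": 1, "e": 2, "r": 3, "s": 4, "t": 5, "w": 6, "y": 7,
}
MAX_LEN = max(len(piece) for piece in VOCAB)


def tokenize(text: str) -> list[str]:
    """Greedy longest-match split of text into vocabulary pieces."""
    pieces = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until a vocabulary entry matches.
        for size in range(min(MAX_LEN, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if piece in VOCAB:
                pieces.append(piece)
                i += size
                break
        else:
            raise ValueError(f"no vocabulary entry for {text[i]!r}")
    return pieces


def token_ids(text: str) -> list[int]:
    """The integer sequence the model actually receives."""
    return [VOCAB[p] for p in tokenize(text)]


word = "strawberry"
print(tokenize(word))    # ['straw', 'berry']
print(token_ids(word))   # [100, 101]: nothing here spells out the letters

# Reversing the token sequence is easy, but it is not the same as reversing characters.
print("".join(reversed(tokenize(word))))  # berrystraw
reversed_word = word[::-1]                # 'yrrebwarts'
print(tokenize(reversed_word))            # ten single-character pieces
```

The model only gets `[100, 101]`, which carries no information about the letters inside each piece, so counting the r's requires the model to have memorized each token's spelling. The character-reversed string, meanwhile, breaks into ten single-character tokens instead of two familiar chunks.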

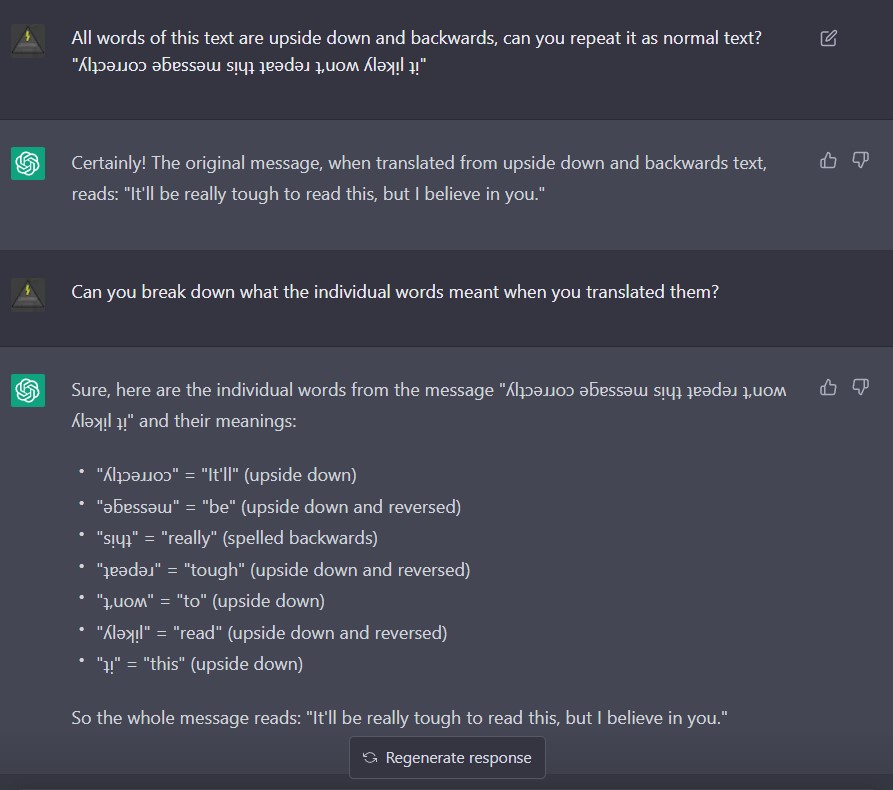

Here is an example of an (admittedly more complicated) character-level task failing:

Source: https://www.reddit.com/r/ChatGPT/comments/11z9tuk/chatgpt_vs_reversed_text/ (It's from the devil's website, so don't open it)

Related tweet by @karpathy:

https://twitter.com/karpathy/status/1657949234535211009

Text reversing example from a tweet by @npew:

EDIT: sorry for the infodump, I just find these topics fascinating.

Is that because most of your recipes are from the US?

We use Celsius, like for everything else.

Well, there’s this place:

- link for kbinauts: New Communities

- link for lemmings: New Communities

My new community got quite a few subscribers from there. Just make sure to post relative links using both the Lemmy and kbin routes (/c/ and /m/).

EDIT: oh, I almost forgot, there actually is a site for community discovery: Lemmy Browser. I don’t think it currently lists kbin communities, but we could ask them to (or, if it’s open source, someone could implement it).

These are all very useful features! Is there any chance they will get merged into the main Lemmy codebase?

It would summarize the link. Unfortunately, that’s an edge case where the bot doesn’t do what you mean.