I'm probably not qualified to speak about this, since I use a low-profile RX 550 with a 768p monitor and almost never play newer titles, but I want to kickstart a discussion, so hear me out.

The push for more realistic graphics has been going on for longer than most of us can remember, and for most of that time it made sense, as anyone who has looked at an older game can confirm (I'm someone who enjoys making fun of weird-looking 3D people).

But I feel game graphics have reached the point of diminishing returns. AAA studios today spend millions of dollars just to match the graphical level of their previous titles, often sacrificing other, more important things along the way, and people are spending a lot of money unnecessarily on power-hungry, heat-generating GPUs.

I understand getting an expensive GPU for high-resolution, high-refresh-rate gaming, but for 1080p? You shouldn't need anything more powerful than a 1080 Ti for years. I think game studios should slow down their graphical improvements, since in my opinion they are unnecessary and just prevent people with lower-end systems from enjoying games. And who knows, maybe we'll start seeing cheap 50-watt gaming GPUs that can run games at medium/high settings; even iGPUs render good graphics now.

TL;DR: why pay more and hurt the environment with higher power consumption when what we have is enough, and possibly overkill?

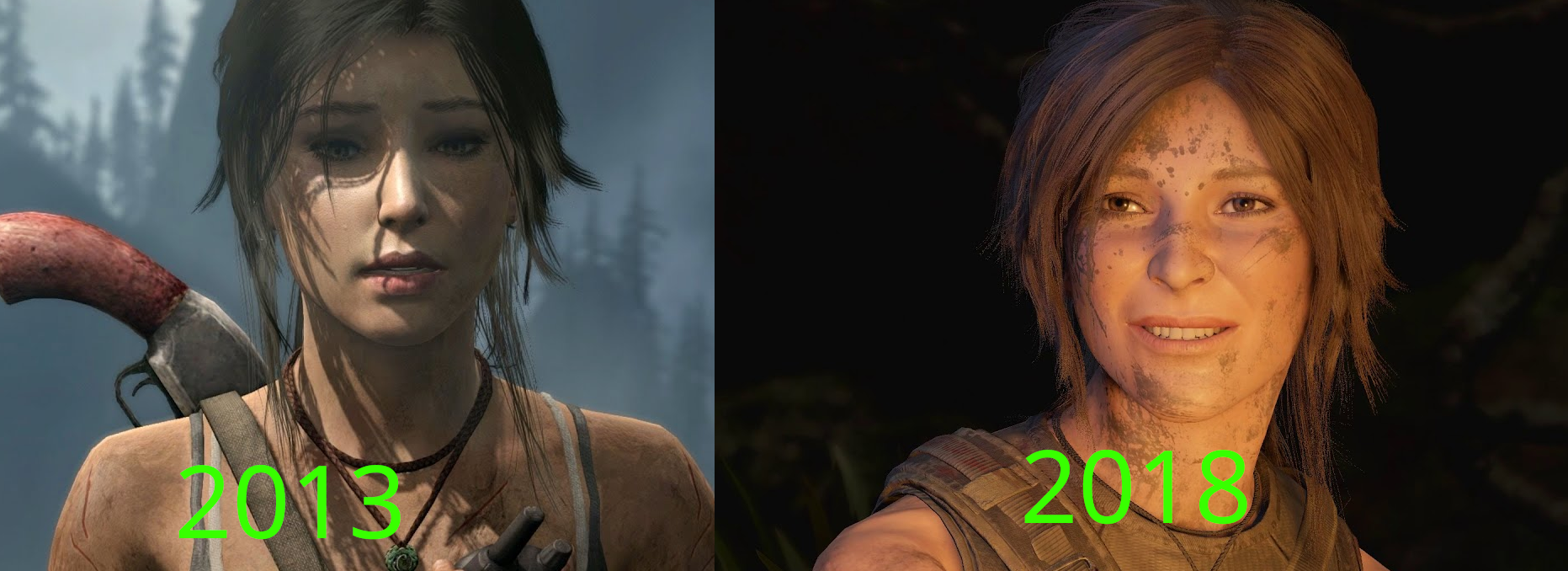

Note: it would be insane of me to claim there isn't a big difference between the two pictures (Tomb Raider 2013 vs. Shadow of the Tomb Raider 2018), but can you really call either of them bad, especially the right picture, which is 5 years old?

Note 2: this is little more than a discussion starter and is unlikely to evolve into anything larger.

I think in some cases there's a lot of merit to it. Take Red Dead Redemption: both games are pretty graphically intensive (if not cutting edge), but the graphics further the immersion in a meaningful way. Red Dead Redemption 2 really sells its rich natural environment for you to explore and interact with, and it wouldn't be quite the same game without it.

Also, that Tomb Raider example is really disingenuous. The level of fidelity in the environments is night and day between the two, as is the quality of animation. In your example, the only thing you can really compare is the skin shaders, and those aren't even close: SotTR really sells that you are looking at real people, something the 2013 game approached but never quite achieved, IMO.

If you don't care, then good for you! My wallet wishes I didn't, but it's a fun hobby nonetheless to push things to their limits, and I'm personally fascinated by the technology. I always pick up some of the fastest hardware every other generation, and I enjoy tinkering with it to make everything run as well as possible.

You're probably right that for the average person we're approaching the point where they just don't care. I just wish developers would push for more clarity in image presentation at this point; modern games are sometimes a bit of a muddy mess, especially with FSR/DLSS.

It mattered a lot more early on, because doubling the on-screen polygon count meant you could do a lot more gameplay-wise: larger environments, more stuff on screen, etc. These days you can pretty much do what you want if you're happy to drop a little fidelity in individual objects.

I've noticed this a lot in comparisons claiming to show that graphics quality has regressed (either over time, or relative to an earlier demo reel of the same game), where the person making the point cherry-picks drastically different lighting or atmospheric conditions that put the later image in a bad light. Like, no crap Lara looks better in the 2013 image: she's lit from an angle that highlights her facial features and is inexplicably wearing makeup in the midst of a jungle adventure. The Shadow of the Tomb Raider image, by comparison, shows a dirty-faced Lara pulling a face while lit from an unflattering angle by a campfire. Compositionally, of course the first image is prettier, but as you point out, the lack of effective subsurface scattering in the Tomb Raider 2013 skin shader is painfully apparent next to SotTR. The newer image is more realistic, even if it's less flattering.

I'm someone who doesn't care much about graphics. I play most modern games at 1080p on medium/high settings with my RTX 3060.

And yet I totally agree with your points. Older games often had rich-looking environments from a distance, but if you got close or tried to interact with them, the illusion broke: leaves couldn't move independently, plants didn't react when you trampled them, etc.

A lot of graphical improvements also come with improvements in how elements in the game interact with each other. That definitely adds to the immersion, because you feel like you're part of the environment.