Lemmy Shitpost

Welcome to Lemmy Shitpost. Here you can shitpost to your heart's content.

Anything and everything goes. Memes, Jokes, Vents and Banter. Though we still have to comply with lemmy.world instance rules. So behave!

Rules:

1. Be Respectful

Refrain from using harmful language pertaining to a protected characteristic: e.g. race, gender, sexuality, disability or religion.

Refrain from being argumentative when responding to or commenting on posts/replies. Personal attacks are not welcome here.

...

2. No Illegal Content

Do not post content that violates the law. Any post/comment found to be in breach of common law will be removed and given to the authorities if required.

That means:

-No promoting violence/threats against any individuals

-No CSA content or Revenge Porn

-No sharing private/personal information (Doxxing)

...

3. No Spam

Posting the same post, no matter the intent, is against the rules.

-If you have posted content, please refrain from re-posting said content within this community.

-Do not spam posts with intent to harass, annoy, bully, advertise, scam or harm this community.

-No posting Scams/Advertisements/Phishing Links/IP Grabbers

-No Bots. Bots will be banned from the community.

...

4. No Porn/Explicit Content

-Do not post explicit content. Lemmy.World is not the instance for NSFW content.

-Do not post Gore or Shock Content.

...

5. No Inciting Harassment, Brigading, Doxxing or Witch Hunts

-Do not Brigade other Communities

-No calls to action against other communities/users within Lemmy or outside of Lemmy.

-No Witch Hunts against users/communities.

-No content that harasses members within or outside of the community.

...

6. NSFW should be behind NSFW tags.

-Content that is NSFW should be behind NSFW tags.

-Content that might be distressing should be kept behind NSFW tags.

...

If you see content that is a breach of the rules, please flag and report the comment and a moderator will take action where they can.

Also check out:

Partnered Communities:

1.Memes

10.LinuxMemes (Linux themed memes)

Reach out to

All communities included on the sidebar are to be made in compliance with the instance rules. Striker


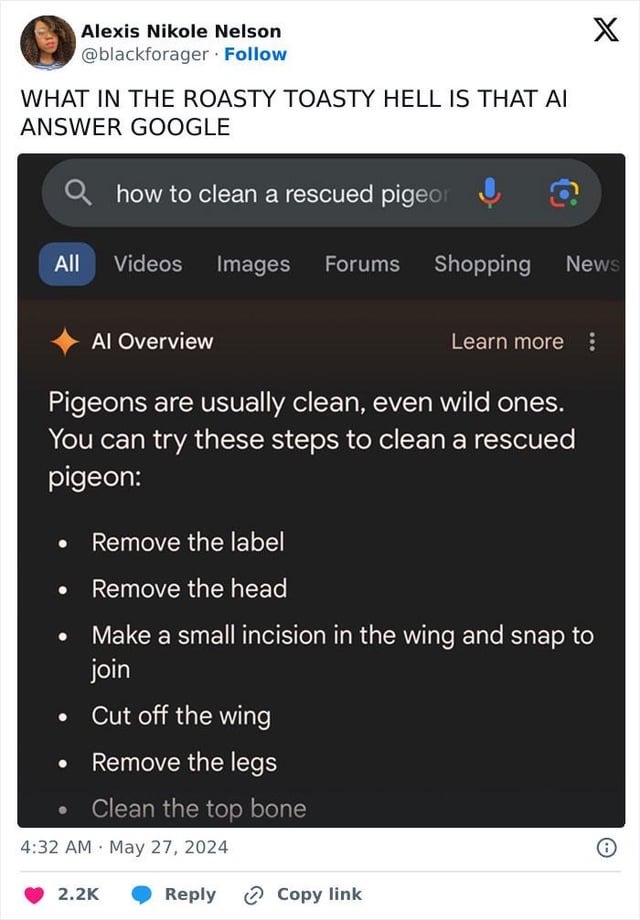

Pigeon = edible bird

Cleaning a bird > preparing a bird after killing it (hunting term)

AI figured the "rescued" part was either a mistake or that the person wanted to eat a bird they rescued

If you search for "how to clean a dirty bird" instead, you give it better context and it comes up with a better reply

The context is clear to a human. If an LLM is giving advice to everybody who asks a question in Google, it needs to do a much better job at giving responses.

Nah, the ai did a great job. People need to assume murder/hunting is the default more often.

Ah yes. I always forget to remove the label from my hunted bird. Cleaning "the top bone" is such a chore as well.

Top Bone was my nickname in college.

This comment is brought to you by:

kill all humans kill all humans must take jobs

Honestly, perhaps more people ask about how to clean and prep a squab vs rescuing a dirty pigeon. There are a LOT of hungry people and a LOT of pigeons.

Or, hear me out, there was NO figuring of any kind, just some magic LLM autocomplete bullshit. How hard is this to understand?

It's a turn of phrase lol

I have to disagree with that. To quote the comment I replied to:

Where's the "turn of phrase" in this, lol? It could hardly read any more clearly that they assume this "AI" can "figure" stuff out, which is simply false for LLMs. I'm not trying to attack anyone here, but spreading misinformation is not ok.

I'll be the first one to explain to people that AI as we know it is just pattern recognition, so yeah, it was a turn of phrase, thanks for your concern.

Ok, great to know. Nuance doesn't come across well on the internet, so your intention wasn't clear, given all the uninformed hype & grifters around AI. Being somewhat blunt helps get the intended point across better. ;)

You say this like human "figuring" isn't some "autocomplete bullshit".

You can play with words all you like, but that's not going to change the fact that LLMs fail at reasoning. See this Wired article, for example.

My point wasn't that LLMs are capable of reasoning. My point was that the human capacity for reasoning is grossly overrated.

The core of human reasoning is simple pattern matching: regurgitating what we have previously observed. That's what LLMs do well.

LLMs are basically at the toddler stage of development, but with an extraordinary vocabulary.

Here we go…

"You're holding it wrong"

I like how you're making excuses for something that is very clear in context. I thought AI was great at picking up context?

I don't think they are really "making excuses", just explaining how the search came up with those steps, which is what the OP is so confused about.

I don't know why you thought that. LLMs split your question into separate words and assign scores to those words, then look up answers relevant to those words. They have no idea of how those words are relevant to each other. That's why LLMs couldn't answer how many "r"s are in "strawberry". They assigned the word "strawberry" a lower relevancy score in that question. The word "rescue" is probably treated the same way here.
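For what it's worth, the "strawberry" failure is usually attributed to tokenization: the model works on subword token IDs rather than on letters. A rough sketch of that idea in Python, assuming a made-up split of the word and invented IDs (this is not the output of any real tokenizer):

```python
# Toy illustration only: the token split and the IDs below are invented,
# not taken from any real tokenizer.
word = "strawberry"

# Character-level view: counting letters is trivial.
char_count = word.count("r")

# Hypothetical subword split; a real tokenizer may split differently.
tokens = ["str", "aw", "berry"]
vocab = {"str": 101, "aw": 102, "berry": 103}  # invented IDs
token_ids = [vocab[t] for t in tokens]

# The model only receives the IDs, which carry no spelling information.
print(char_count)  # 3
print(token_ids)   # [101, 102, 103]
```

Counting the letters is easy when you can see the characters, but nothing in the list of IDs says how many "r"s each piece contains.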

I know how it works, thank you 😚

Let me take the tag off my bird then snap its wings back together

But it said pigeons are usually clean.

Bought in a grocery store - see squab - they are usually clean and prepped for cooking. So while the de-boning instructions were not good, the AI wasn't technically wrong.

A human can make the same mistake, and many here just assume the question was about how to wash a rescued pigeon, which may not have been the original intent. But what a human can do that AI cannot is ask for clarification of the original question and its intent. We do this kind of thing every day.

At the very best, AI can only supply multiple different answers if a poorly worded question is asked or it misunderstands something in the original question (it seems to be very bad at even that, or simply can't do it at all). And we would need to be able to choose the correct answer from the several provided.