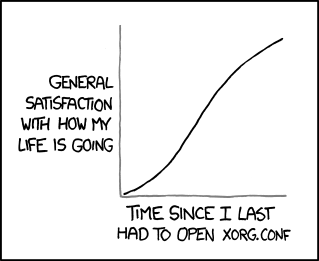

Happens all the time on Linux. The current instance would be the shift from X11 to Wayland.

The first thing I noticed was when the audio system switched from OSS to ALSA.


And then from ALSA, through all those barely functional audio daemons, to PulseAudio, and then again to PipeWire. That one sure took a few tries to get right.

And the strangest thing about that is that neither PulseAudio nor PipeWire is replacing anything. ALSA and PulseAudio are still there, even though I handle my audio through PipeWire.

How is PulseAudio still there? I mean, sure, the protocol is still there, but it’s handled by pipewire-pulse on most systems nowadays ~~(KDE specifically requires PipeWire)~~.

Also, PulseAudio was never designed to replace ALSA. It sits on top of ALSA to hide some of the complexity that programs would face if they used ALSA directly.
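To make the layering concrete: ALSA exposes the sound card, and one of the jobs a sound server on top of it takes over is mixing several applications' audio into a single output stream. Below is a minimal Python sketch of just that mixing step, assuming mono 16-bit signed PCM samples and clip-on-overflow behavior; the function name `mix_streams` is hypothetical, and real servers like PulseAudio and PipeWire also resample, convert formats, and manage latency.

```python
from typing import List, Sequence

INT16_MIN, INT16_MAX = -32768, 32767  # range of signed 16-bit PCM samples


def mix_streams(streams: Sequence[Sequence[int]]) -> List[int]:
    """Mix several mono int16 PCM streams into one output buffer.

    Hypothetical sketch: a stream that runs out is treated as silence,
    and sums that overflow the int16 range are clipped.
    """
    length = max((len(s) for s in streams), default=0)
    mixed = []
    for i in range(length):
        # Sum the i-th sample of every stream that still has data.
        total = sum(s[i] for s in streams if i < len(s))
        # Clip to the int16 range instead of letting the value wrap around.
        mixed.append(max(INT16_MIN, min(INT16_MAX, total)))
    return mixed


if __name__ == "__main__":
    music = [1000, 2000, 30000, -30000]
    notification = [500, 500, 5000]
    print(mix_streams([music, notification]))  # [1500, 2500, 32767, -30000]
```

Clipping is the simplest overflow strategy; it keeps the sketch short but audibly distorts loud mixes, which is one reason real servers do more careful gain handling.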

There are issues with the software we’re using that can only be remedied by massive changes or a complete rewrite.

I think this was the main reason for the Wayland project. Xorg had so many issues that it made more sense to start over than to try to fix them within Xorg.

There is some Rust code that needs to be rewritten in C.

Strange. I’m not exactly keeping track, but isn’t the current trend going in exactly the opposite direction? It seems like tons of utilities are being rewritten in Rust to avoid memory-safety bugs.

Starting anything from scratch is a huge risk these days. At best you'll have something like the Python 2 -> 3 ~~rewrite~~ overhaul (leaving scraps of legacy code all over the place); at worst you'll have something like GNOME/KDE (where the community splits rather than adopting a new standard). I would say that most of the time, there are only two ways to get a new standard to reach mass adoption:

1. Retrofit everything. Extend old APIs where possible. Build your new layer on top of HTTPS, or JavaScript, or ASCII, or something else that already has widespread adoption. Make a clear upgrade path for old users, but maintain compatibility for as long as possible.

2. Buy 99% of the market and declare yourself king (cough cough Chromium).
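The "retrofit everything, extend old APIs" approach can be sketched in Python as follows: an existing function gains a new capability through a keyword argument whose default reproduces the old behavior, and a renamed function keeps its old name alive as a deprecated alias. The names `load_config`, `parse_config`, and the `strict` parameter are hypothetical, chosen only to illustrate the pattern.

```python
import warnings
from typing import Dict


def load_config(text: str, strict: bool = False) -> Dict[str, str]:
    """Parse simple 'key=value' lines.

    `strict` is the new, opt-in behavior. Its default (False) keeps the
    old lenient behavior, so existing callers see no change.
    """
    result: Dict[str, str] = {}
    for lineno, line in enumerate(text.splitlines(), start=1):
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if "=" not in line:
            if strict:
                raise ValueError(f"line {lineno}: expected key=value")
            continue  # old behavior: silently ignore malformed lines
        key, _, value = line.partition("=")
        result[key.strip()] = value.strip()
    return result


def parse_config(text: str) -> Dict[str, str]:
    """Old name, kept as a deprecated alias for backward compatibility."""
    warnings.warn(
        "parse_config() is deprecated; use load_config()",
        DeprecationWarning,
        stacklevel=2,
    )
    return load_config(text)
```

Old code calling `parse_config(text)` keeps working and only sees a warning, while new code can opt into `load_config(text, strict=True)`; the old name can be removed once callers have migrated.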

Python 3 wasn't a rewrite, it just broke compatibility with Python 2.
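Two of the best-known incompatibilities, shown with Python 3 semantics: `/` became true division (in Python 2, `7 / 2` on integers gave `3`), and text (`str`) and binary data (`bytes`) became distinct types that no longer mix implicitly, whereas Python 2 would silently coerce ASCII-only byte strings when combined with Unicode text.

```python
# Python 3 semantics; in Python 2, 7 / 2 on two ints evaluated to 3.
print(7 / 2)   # 3.5: "/" is always true division in Python 3
print(7 // 2)  # 3: floor division needs the explicit "//" operator

text = "héllo"               # str: a sequence of Unicode code points
data = text.encode("utf-8")  # bytes: an explicit encoding step is required
print(len(text), len(data))  # 5 6: "é" takes two bytes in UTF-8

try:
    text + data  # Python 3 refuses to mix str and bytes implicitly
except TypeError as exc:
    print("TypeError:", exc)
```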

The entire thing. It needs to be completely rewritten in Rust, complete with unit tests and Miri in CI, and converted to a high-performance microkernel. Everything evolves into a crab /s

I laughed waay too hard at this.

A PM said something similar earlier this week during a performance meeting: "I heard Rust was fast. Maybe we should rewrite the software in that?"

Linux does this all the time.

- ALSA -> Pulse -> PipeWire
- Xorg -> Wayland
- GNOME 2 -> GNOME 3
- Every window manager, compositor, and DE
- GIMP 2 -> GIMP 3
- SysV init -> systemd
- OpenSSL -> BoringSSL
- Twenty different kinds of package managers
- Many shifts in popular software

Maybe not exactly Linux, sorry for that, but it was the first thing that came to mind.

Web browsers really should be rewritten to be more modular and easier to modify. The web was supposed to be bulletproof and keep working even when some features are missing, but websites now assume every browser implements 99% of Chromium's features, so they won't work in any browser written from scratch today.

The same people who make Chrome have stuffed the web standards with needlessly bloated fluff that makes them nearly impossible for anyone else to implement. If alternative browsers are going to be a thing again, we need a new standard, or at least the current standard with large portions removed.

We haven't rewritten the firewall code lately, right? *checks* Oh, it looks like we have. Now it's nftables.

I learned ipfirewall, then ipchains, then iptables came along, and I was like, oh hell no, not again. At that point I found software to set up the firewall for me.

Damn, you're old. iptables came out in 1998. That's what I learned on (and I still don't fully understand it).
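What ipchains, iptables, and nftables share is the same basic evaluation model: a packet is checked against an ordered chain of rules, the first rule that matches decides its fate, and if nothing matches, the chain's default policy applies. The Python sketch below models only that evaluation order; the `Packet` and `Rule` fields, the string verdicts, and the example rules are simplified assumptions, not the real data structures or syntax of any of these tools.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Packet:
    protocol: str  # e.g. "tcp" or "udp"
    dst_port: int


@dataclass
class Rule:
    verdict: str                    # "accept" or "drop"
    protocol: Optional[str] = None  # None matches any protocol
    dst_port: Optional[int] = None  # None matches any port

    def matches(self, pkt: Packet) -> bool:
        return (self.protocol in (None, pkt.protocol)
                and self.dst_port in (None, pkt.dst_port))


def evaluate(chain: List[Rule], policy: str, pkt: Packet) -> str:
    """Return the verdict of the first matching rule, else the chain policy."""
    for rule in chain:
        if rule.matches(pkt):
            return rule.verdict
    return policy


if __name__ == "__main__":
    input_chain = [
        Rule("accept", protocol="tcp", dst_port=22),  # allow SSH
        Rule("drop", protocol="tcp", dst_port=23),    # block telnet explicitly
    ]
    print(evaluate(input_chain, "drop", Packet("tcp", 22)))  # accept
    print(evaluate(input_chain, "drop", Packet("udp", 53)))  # drop (policy)
```

Because the first match wins, rule order matters: putting a catch-all `Rule("drop")` at the top of the chain would shadow every rule below it.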

GUI toolkits like Qt and Gtk. I can't tell you how to do it better, but something is definitely wrong with the standard class hierarchy framework model these things adhere to. Someday someone will figure out a better way to write GUIs (or maybe that already exists and I'm unaware) and that new approach will take over eventually, and all the GUI toolkits will have to be scrapped or rewritten completely.

Idk man, I've used a lot of UI toolkits, and I don't really see anything wrong with GTK (though they do basically rewrite it from scratch every few years it seems...)

The only thing that comes to mind is the React-ish world of UI systems, where model-view-controller patterns are more natural to use, i.e., a concept of state where the UI automatically re-renders from the data backing it.
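The "state drives the UI" idea can be sketched in a few lines of Python: code never mutates widgets directly; it updates a state object, and every change re-runs a pure render function that builds a fresh description of the UI from that state. The `Store` class and `render` function are hypothetical illustrations of the pattern, not React's API; real frameworks also diff the new description against the previous one so only the changed widgets are touched.

```python
from typing import Any, Callable, Dict, List


class Store:
    """Holds UI state and notifies subscribers whenever it changes."""

    def __init__(self, **initial: Any) -> None:
        self._state: Dict[str, Any] = dict(initial)
        self._listeners: List[Callable[[Dict[str, Any]], None]] = []

    def subscribe(self, listener: Callable[[Dict[str, Any]], None]) -> None:
        self._listeners.append(listener)
        listener(dict(self._state))  # render once with the initial state

    def set(self, **changes: Any) -> None:
        self._state.update(changes)
        for listener in self._listeners:
            listener(dict(self._state))  # re-render from the new state


def render(state: Dict[str, Any]) -> str:
    """Pure function: the same state always yields the same UI description."""
    items = "".join(f"\n  - {item}" for item in state["todos"])
    return f"[{state['title']}] ({len(state['todos'])} items){items}"


if __name__ == "__main__":
    frames: List[str] = []
    store = Store(title="Todo", todos=[])
    store.subscribe(lambda s: frames.append(render(s)))
    store.set(todos=["write code"])
    store.set(todos=["write code", "test it"])
    print(frames[-1])
```

The contrast with a classic widget hierarchy is that nothing here says "add a row to the list"; the list is simply re-derived from `todos` every time.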

But generally, GTK is a joy, and imo the world of HTML has long been trying to catch up to it. We only got flexbox kinda recently, and GTK layouts have always worked that way. The tooling, design guidelines, and visual editors have been great for a long time.
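The one-dimensional box model behind flexbox and GTK's box containers roughly boils down to: give each child its minimum size, then split the leftover space among the children that are allowed to grow. CSS uses a proportional `flex-grow` factor; GTK's `hexpand` is roughly the equal-weights case. Below is a minimal Python sketch of that distribution step under simplifying assumptions (no shrinking, no maximum sizes, integer pixels, rounding remainder given to the last growing child); `Child` and `layout_row` are hypothetical names, not GTK or CSS APIs.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Child:
    min_size: int      # minimum width in pixels
    grow: float = 0    # share of leftover space; 0 means "don't expand"


def layout_row(children: List[Child], available: int) -> List[int]:
    """Return the width assigned to each child in a horizontal row."""
    sizes = [c.min_size for c in children]
    leftover = available - sum(sizes)
    total_grow = sum(c.grow for c in children)
    if leftover <= 0 or total_grow == 0:
        return sizes  # nothing to distribute (overflow is not handled here)

    growing = [i for i, c in enumerate(children) if c.grow > 0]
    given = 0
    for i in growing:
        share = int(leftover * children[i].grow / total_grow)
        sizes[i] += share
        given += share
    sizes[growing[-1]] += leftover - given  # rounding remainder to the last grower
    return sizes


if __name__ == "__main__":
    # A fixed-width label, a text entry growing twice as fast as a spacer.
    row = [Child(80), Child(100, grow=2), Child(0, grow=1)]
    print(layout_row(row, 500))  # [80, 313, 107]
```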

It's actually a classic programmer move to start over again. I've read the book "Clean Code", and it talks about this a little.

Apparently, it wouldn't be the first time a fresh start turned into the same mess as the old codebase it was supposed to replace. While starting over can be tempting, refactoring is, in my opinion, better.

If you refactor a lot, you start thinking the same way about the new code you write. So any new code you write will probably be better, and you'll be cleaning up the old code too. If you know you have to clean up the mess anyway, better to do it right the first time....

However, it's not hard to imagine that some programming languages simply become too old and an application has to be rewritten in a newer language to ensure continuity. So I think that does happen sometimes.

Yeah, this was something I recognized about myself in the first few years out of school. My brain always wanted to say "all of this is a mess, let's just delete it all and start from scratch" as though that was some kind of bold/smart move.

But I now understand that it's the mark of a talented engineer to see where we are as point A, where we want to be as point B, and be able to navigate from A to B before some deadline (and maybe you have points/deadlines C, D, E, etc.). The person who has that vision is who you want in charge.

Chesterton's Fence is the relevant analogy: "you should never destroy a fence until you understand why it's there in the first place."

ALSA > PulseAudio > PipeWire

- About 20 xdg-open alternatives (which is, btw, just a wrapper around gnome-open, exo-open, etc.)
- My session scripts, after a deep dive. Seriously, startxfce4 has workarounds from the '80s, and software rot has already affected the formatting.
- Turnstile instead of elogind (which is tied to systemd releases)
- mingetty, because who uses a modem nowadays?
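Roughly speaking, `xdg-open` works out which desktop environment is running and hands the file or URL to that environment's own opener. The Python sketch below models only that dispatch step, using the `XDG_CURRENT_DESKTOP` environment variable (which can hold a colon-separated list such as `ubuntu:GNOME`). The opener table is an illustrative assumption: the real script checks more variables, its table varies between versions, and newer releases call `gio open` rather than the deprecated `gnome-open`. The function `opener_command` is hypothetical and only builds the command instead of running it.

```python
import os
from typing import List, Mapping, Optional

# Illustrative mapping only; the real xdg-open script's table differs by version.
OPENERS = {
    "GNOME": ["gio", "open"],
    "KDE": ["kde-open5"],
    "XFCE": ["exo-open"],
}


def opener_command(target: str,
                   env: Optional[Mapping[str, str]] = None) -> Optional[List[str]]:
    """Build the command a desktop-specific opener would run for `target`.

    Returns None when no known desktop is detected, meaning the caller
    should fall back to some generic handler.
    """
    env = os.environ if env is None else env
    # XDG_CURRENT_DESKTOP may hold a colon-separated list, e.g. "ubuntu:GNOME".
    for desktop in env.get("XDG_CURRENT_DESKTOP", "").split(":"):
        cmd = OPENERS.get(desktop.upper())
        if cmd is not None:
            return cmd + [target]  # new list; OPENERS itself is not modified
    return None


if __name__ == "__main__":
    print(opener_command("https://example.org",
                         {"XDG_CURRENT_DESKTOP": "ubuntu:GNOME"}))
```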

Be careful what you wish for. I’ve been part of some rewrites that turned out worse than the original in every way. Not even code quality was improved.

Some form of stable, modernized Bluetooth stack would be nice. Every other Bluetooth update breaks at least one of my devices.

There are many instances like that: systemd vs. System V init, X vs. Wayland, ed vs. Vim, TeX vs. LaTeX vs. LyX vs. ConTeXt, OpenOffice vs. LibreOffice.

Usually someone identifies a problem or a new way of doing things… then a lot of people adapt and some people don’t. Sometimes the new improvement is worse, sometimes it inspires a revival of the old system for the better…

It’s almost never catastrophic for anyone involved.

I would say the whole set of C-based assumptions underlying most modern software, specifically errors being just an integer constant that gets translated into a text message, so it carries no details about the operation attempted (who tried to do what to which object, and why it failed).
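To illustrate the complaint: a C-style error is a bare number such as `ENOENT`, and `strerror()` can only turn it into a generic message. A structured error can carry the missing context. The Python sketch below contrasts the two; the `OperationError` class and its fields (`actor`, `operation`, `target`, `cause`) are hypothetical, chosen to mirror the "who tried to do what to which object and why" wording.

```python
import errno
import os


class OperationError(Exception):
    """Hypothetical error type that keeps the context a bare errno loses."""

    def __init__(self, actor: str, operation: str, target: str, cause: int) -> None:
        super().__init__(actor, operation, target, cause)
        self.actor = actor          # who attempted the operation
        self.operation = operation  # what they tried to do
        self.target = target        # which object it was done to
        self.cause = cause          # underlying errno value (why it failed)

    def __str__(self) -> str:
        return (f"{self.actor} could not {self.operation} {self.target!r}: "
                f"{os.strerror(self.cause)}")


if __name__ == "__main__":
    # C style: the caller gets an integer and a generic, context-free message.
    print(errno.ENOENT, os.strerror(errno.ENOENT))

    # Structured style: the same errno plus who, what, and which object.
    try:
        raise OperationError("backup-job", "open", "/etc/backup.conf", errno.ENOENT)
    except OperationError as exc:
        print(exc)
```

Python's built-in `OSError` already goes partway in this direction, since it carries `errno`, `strerror`, and `filename` attributes.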

Cough, Wayland, cough (X is just old and Wayland is better)

Alt text: Thomas Jefferson thought that every law and every constitution should be torn down and rewritten from scratch every nineteen years--which means X is overdue.

Not too relevant for desktop users, but NFS.

No way people are actually setting it up with Kerberos auth.