Well, that sucks. I've always had a lingering respect for Mullenweg because I think WordPress.com is a good service, I really like Simplenote, and I thought that Automattic taking over Tumblr couldn't make things worse after the service had been gelded by the porn-haters.

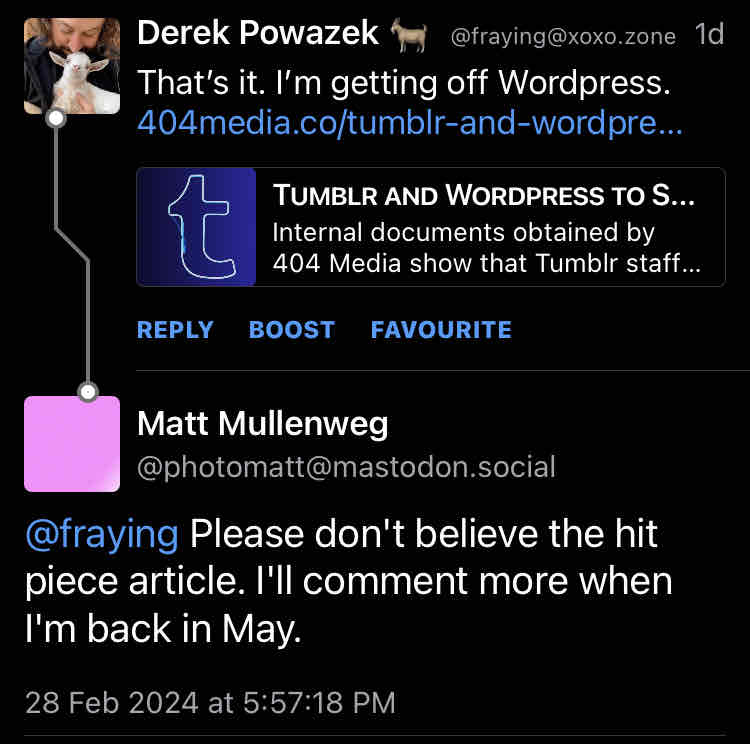

But his latest antics around moderation at Tumblr, and now this, have really shown his true face (although he's hardly alone: Reddit is also gonna sell its users' content to the AI mills).

(I'll never forget laughing at him for losing tens of thousands' worth of Leica gear in a lost/stolen luggage incident many years back.)