this post was submitted on 21 Oct 2024

525 points (98.0% liked)

Facepalm

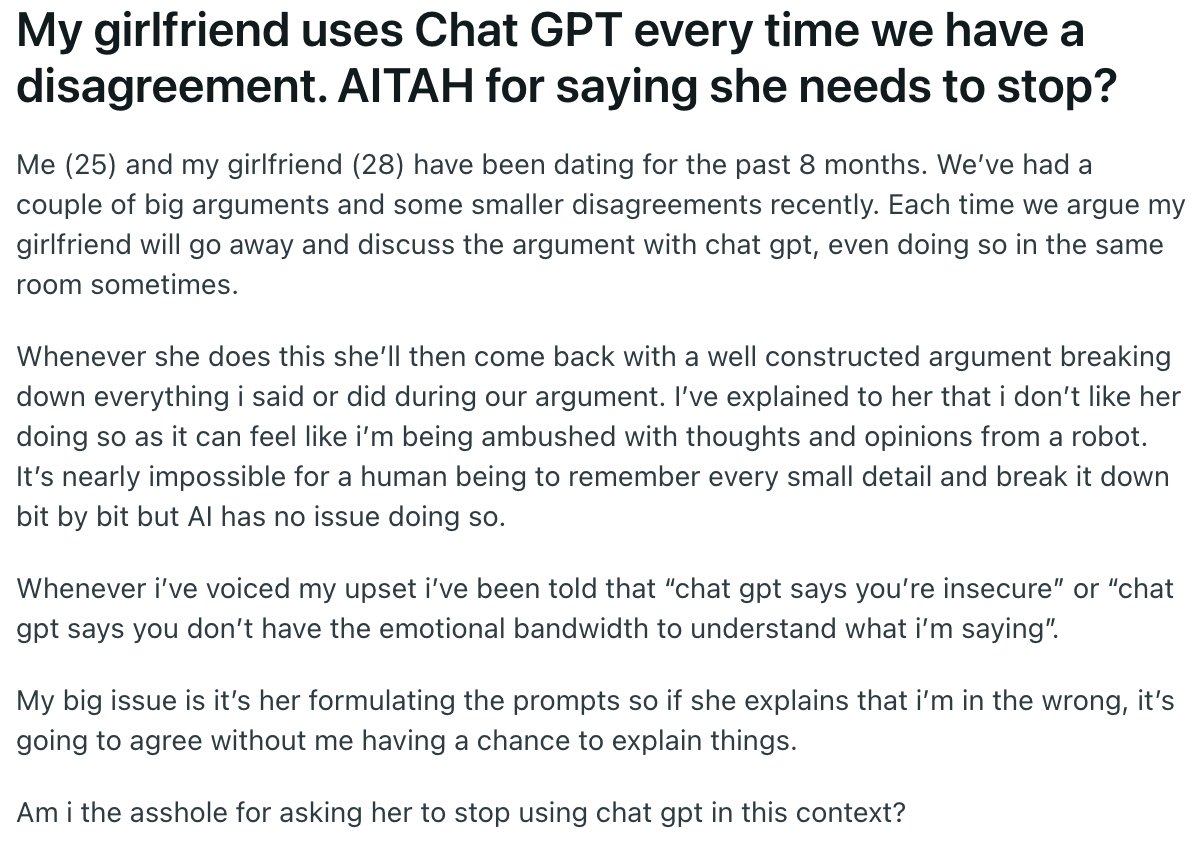

I was having lunch at a restaurant a couple of months back, and overheard two women (~55 y/o) sitting behind me. One of them talked about how she used ChatGPT to decide if her partner was being unreasonable. I think this is only gonna get more normal.

A decade ago she would have been seeking that validation from her friends. ChatGPT is just a validation machine, like an emotional vibrator.

The difference between asking a trusted friend for advice vs asking ChatGPT or even just Reddit is that a trusted friend will have more historical context. They have probably met or at least interacted with the person in question, and they can bring in the context of how this person previously made you feel. They can help you figure out if you're just at a low point or if it's truly a bad situation to get out of.

Asking ChatGPT or Reddit is really like asking a Magic 8 Ball. Framing the question, and simply asking it, helps you interrogate your feelings and form new opinions about the situation. But the answers themselves are pretty useless: there's no historical context to base them on, and they're only as good as the question asked.