Oops ;-)

thumdinger

Clocking in at 37 minutes. Don’t judge prog by the number of tracks… pretty typical album runtime for anything released in the vinyl era.

Also, it’s fantastic.

You mention frigate specifically. Were you running this on the system when the drive failed, or is this a future endeavour?

I bring this up because I also use frigate, and for some time I was running with a misconfigured docker compose that drove my SSD wearout to 40% in a matter of months.

Make sure that the tmpfs is configured per the Frigate documentation and example config. If it is misconfigured like mine was, all of that I/O lands on disk. I believe the ramdisk is used for temporary storage of camera streams until an event occurs and the corresponding clip is committed to disk.
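A quick way to sanity-check this is to look at the mount table from inside the container and confirm the cache path really sits on tmpfs. Below is a minimal sketch of that check. The `/tmp/cache` path is an assumption based on the Frigate example config (verify it against your own compose file), and the helper name `fs_type_for` and the sample mount table are made up for illustration.

```python
def fs_type_for(path, mounts_text):
    """Return the filesystem type of the mount that contains `path`.

    `mounts_text` is the content of /proc/mounts (Linux). The longest
    matching mount point wins; later entries shadow earlier ones.
    """
    best_mount, best_type = "", None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or malformed lines
        mount_point, fs_type = fields[1], fields[2]
        prefix = mount_point.rstrip("/") + "/"
        if path == mount_point or path.startswith(prefix):
            if len(mount_point) >= len(best_mount):
                best_mount, best_type = mount_point, fs_type
    return best_type


# Hypothetical mount table, roughly what a correctly configured container shows.
SAMPLE_MOUNTS = """\
overlay / overlay rw,relatime 0 0
tmpfs /dev/shm tmpfs rw,nosuid,nodev 0 0
tmpfs /tmp/cache tmpfs rw,relatime,size=976564k 0 0
"""

print(fs_type_for("/tmp/cache", SAMPLE_MOUNTS))
```

To run it for real, pass `open("/proc/mounts").read()` as `mounts_text` from a shell inside the container. If the result for the cache path is `overlay` or `ext4` rather than `tmpfs`, the stream cache is being written to the SSD.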

Good luck!

That’s great. Thank you again!

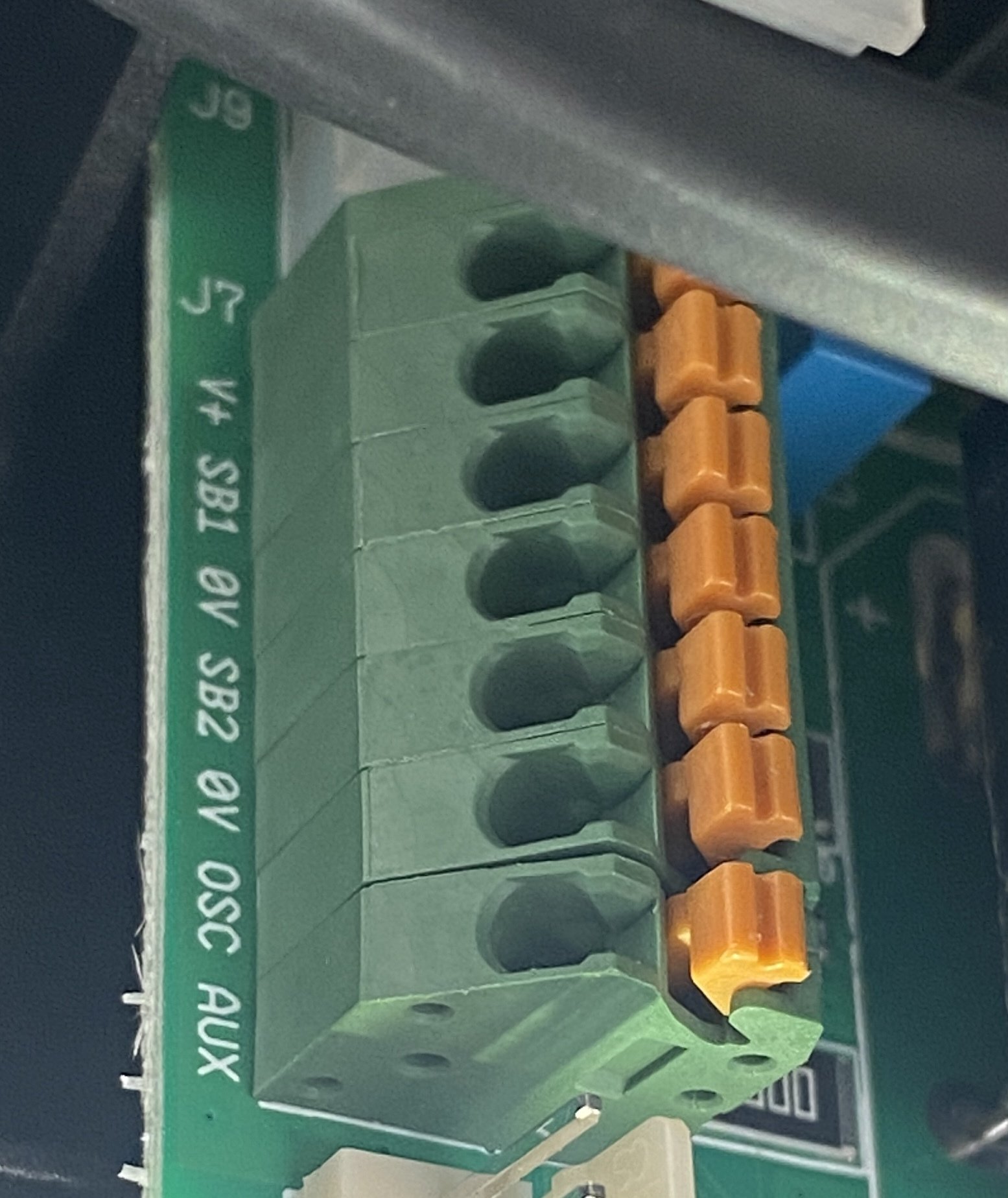

Thank you! Something concrete to go on. Is there a specific name these go by? I couldn’t find them by searching.

I think I have two issues to work through. I’m not sure I applied enough pressure to actuate the spring (access may be a limiting factor in-situ), and I was using an extremely fine gauge of wire. I doubt it would have the stiffness to push in.

Would a stalk lug work to provide added stiffness to the conductor, or does this terminal expect something malleable?

That was my initial thought, but there’s little to no give in the orange button. If anything it only felt like the slight play you get between loosely coupled mechanical pieces. That’s when I tried operating it like a clamp and broke one off.

Pulling around 200W on average.

- 100W for the server. Xeon E3-1231v3 with 8 spinning disks + HBA, a couple of SATA SSDs

- ~80W for the UniFi PoE 48 Pro switch. Most of this is PoE power for half a dozen cameras, downstream switches and APs, and a couple of Raspberry Pis

- ~20W for the Protectli Vault running OPNsense

- Total usage measured via Eaton UPS

- Subsidised during the day with solar power (Enphase)

- Tracked in home assistant
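For anyone wanting to turn those figures into energy use, here is a rough back-of-the-envelope sketch. It assumes the draw is constant around the clock and ignores the solar offset entirely, so treat the result as an upper bound on grid usage. The dictionary keys are just labels I picked.

```python
# Approximate average draw per device, in watts (figures from the list above).
loads_w = {"server": 100, "poe_switch": 80, "firewall": 20}

total_w = sum(loads_w.values())
kwh_per_day = total_w * 24 / 1000   # watts * hours -> kWh
kwh_per_year = kwh_per_day * 365

print(f"{total_w} W -> {kwh_per_day:.1f} kWh/day, {kwh_per_year:.0f} kWh/year")
```

Multiply the yearly figure by your tariff to get a running cost.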

Looks like home assistant

For storage redundancy RAID 5 is not recommended, particularly as you get to high capacity drives (think >8TB). I think the rating to consider is URE (unrecoverable read error, usually specified as 1 in 10^14 bits read, which is roughly 12.5TB).

Once a drive inevitably fails, you are forced to resilver the array to avoid data loss. During the resilver the healthy disks are running at 100%, reading every bit of data they hold so the missing data can be reconstructed from parity. The chance of encountering a URE on another drive approaches certainty at high capacities, as the total number of bits read exceeds the URE rating. As a result the resilver would fail and the array would be lost.
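To put rough numbers on that, here is a small sketch of the usual calculation. It assumes the simplest possible model: every bit read fails independently at exactly the spec sheet rate. Real drives often do better than their rated figure, so read this as a worst-case estimate and not a prediction. The function name is made up for illustration.

```python
import math


def p_ure_during_rebuild(tb_read, ure_rate_per_bit=1e-14):
    """Probability of at least one URE while reading `tb_read` terabytes.

    Model: independent bit errors at a fixed rate, so
    P = 1 - (1 - rate) ** bits, computed in a numerically stable way.
    """
    bits = tb_read * 1e12 * 8  # decimal terabytes -> bits
    return -math.expm1(bits * math.log1p(-ure_rate_per_bit))


# Example: a 4-disk RAID 5 of 8TB drives must read the 3 survivors in full.
for tb in (12.5, 24, 48):
    print(f"{tb:>5} TB read -> {p_ure_during_rebuild(tb):.1%} chance of a URE")
```

Under this model, reading 12.5TB (10^14 bits) already gives about a 63% chance of hitting at least one URE, and it climbs quickly from there. Drives rated at 1 in 10^15 can be modelled by passing `ure_rate_per_bit=1e-15`.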

RAID 6 as a minimum (2 drive redundancy), although a popular option now (and the layout I use) is mirrored vdevs.

Edit: Consider TrueNAS for NAS software. I have been using it for 10 years and it is absolutely rock solid. 25TB usable storage across 4x mirrored vdevs. I run it as a VM inside Proxmox with 4 logical cores on a 10 year old Xeon with 16GB RAM for the VM (I run ECC as was recommended at the time, but whether it’s still considered necessary I’m not certain).

I would also recommend getting an LSI HBA (host bus adapter) like the 9207-8i flashed to IT mode (it must not be in RAID mode; let TrueNAS manage the disks directly). This simplifies passing through all the disks to a VM.

The options I’m looking at have PCIe 4 and seem to be gen 2? Epyc 7282 or 7302.

I think this is where I'm headed. Is there anything to consider with Threadripper vs Epyc? I'm seeing lots of CPU/MOBO/RAM combos on eBay for 2nd gen Epycs. Many posts on Reddit confirming the legitimacy of particular sellers, plus PayPal buyer protection, have me tempted.

First seeing this on my home feed on Jan 7th. Relieved to find the post is 5 days old…