seems like garbage to me

the promptfondlers that make their way into our threads sometimes try to brag about how the LLM is the only way to do basic editor tasks, like wrapping symbols in brackets or diffing logs. it’s incredible every time

me too. this heel turn is disappointing as hell, and I suspected fuckery at first, but the video excerpts Rebecca clipped and Conover’s actions on Twitter since then make it pretty clear he did this willingly.

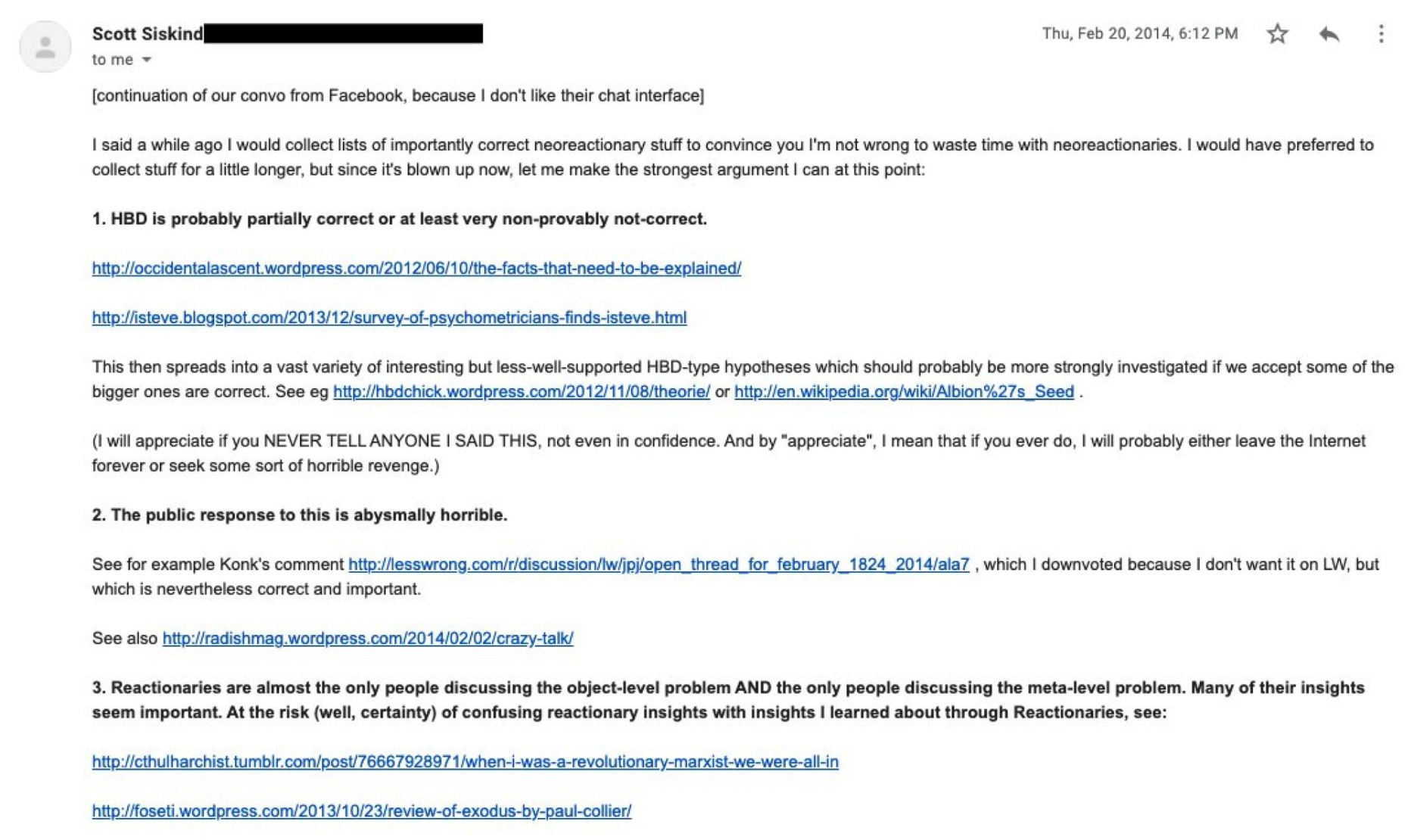

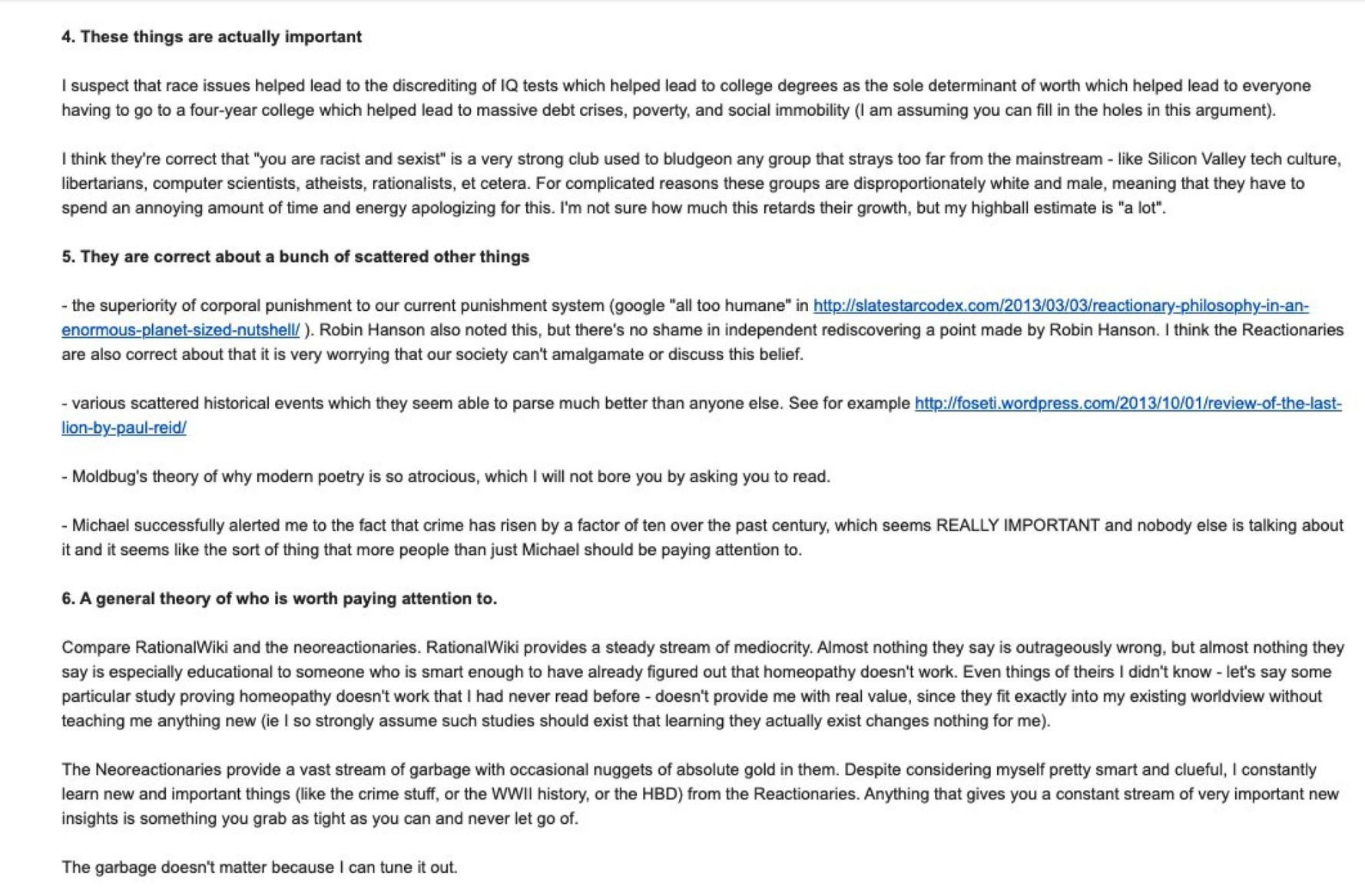

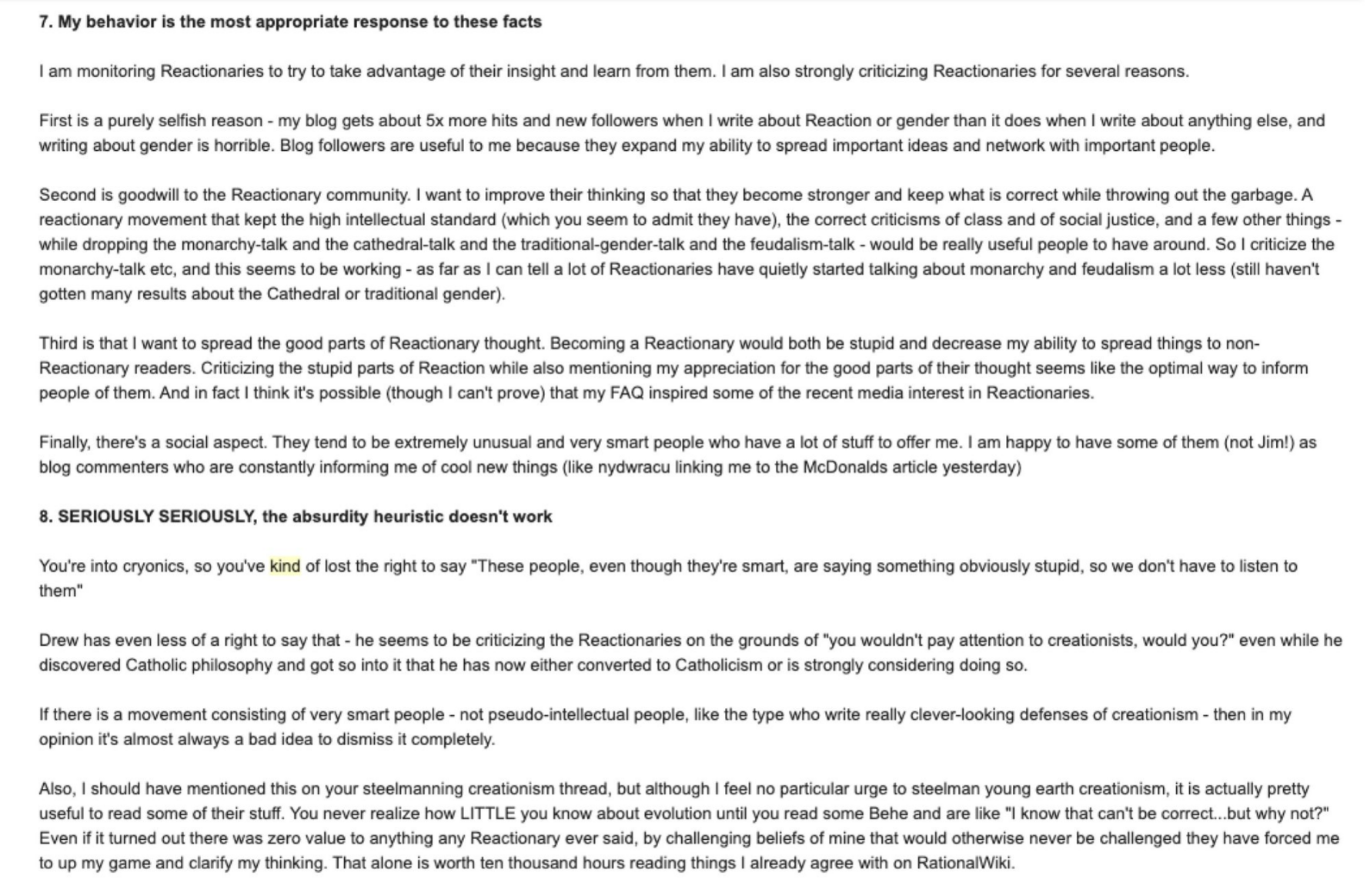

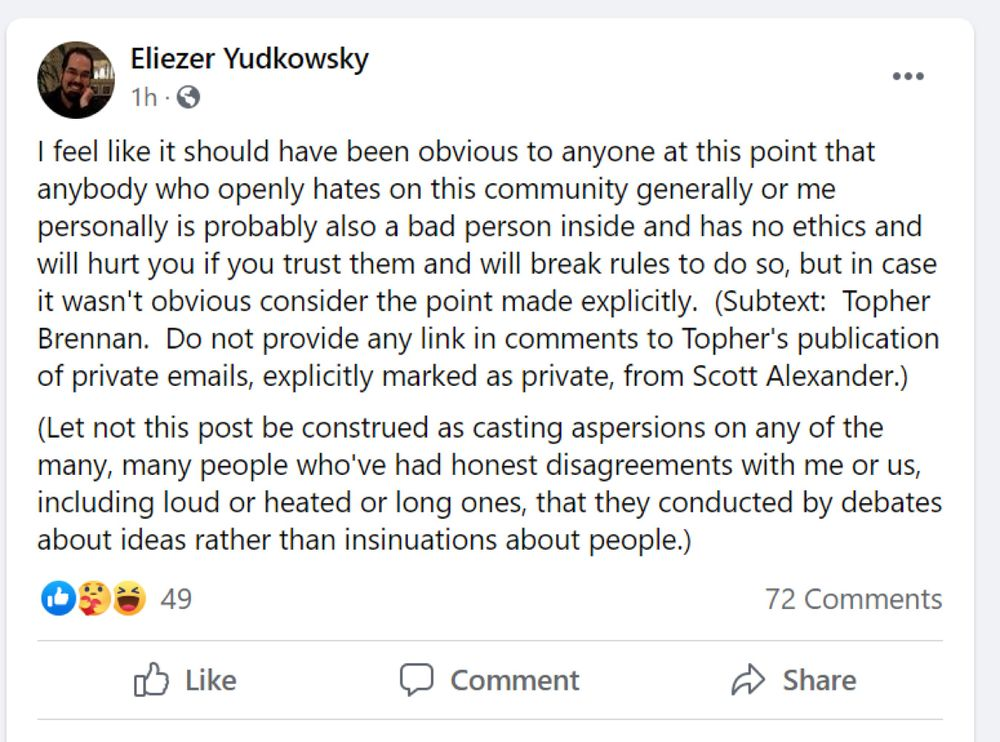

also, fucking ew:

Needs to be put in its place like a misbehaving dog, lol

why do AI guys always have weird power fantasies about how they interact with their slop machines

given your posts in this thread, I don’t think I trust your judgement on what less annoying looks like

everybody’s loving Adam Conover, the comedian skeptic who previously interviewed Timnit Gebru and Emily Bender, organized as part of the last writers’ strike, and generally makes a lot of somewhat left-ish documentary videos and podcasts for a wide audience

5 seconds later

we regret to inform you that Adam Conover got paid to do a weird ad and softball interview for Worldcoin of all things and is now trying to salvage his reputation by deleting his Twitter posts praising it under the guise of pseudo-skepticism

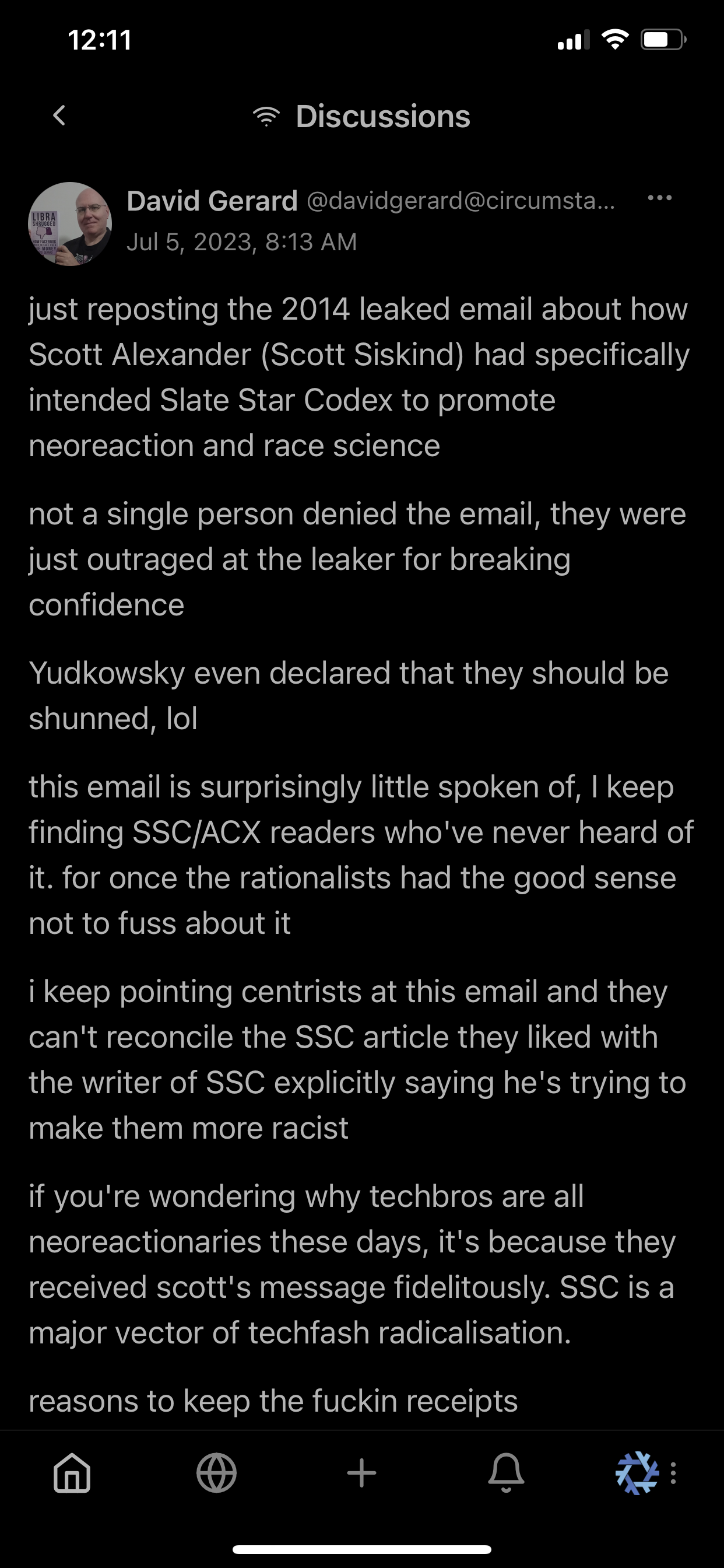

I’m gonna do something now that prob isn’t that allowed, nor relevant for the things we talk about

I consider this both allowed and relevant, though I unfortunately can’t sign it myself

we sincerely hope not

it was some shitty follow-up to your joke, so unfunny it made your post less funny just by being under it. pull this thread from mastodon and chances are you’ll see it if you really want to

anyway if you want a laugh, they threw a tantrum and reported your post because we deleted theirs:

their joke was in that exact tone too because they’re a comedy black hole

it’s really weird that this turned into a tantrum where you tried to report other users for their jokes???

wow, your posts really aren’t good

if it’s undisclosed, it’s obvious from the universally terrible quality of the code, which wastes volunteer reviewers’ time in a way that legitimate contributions almost never do. the “contributors” who lean on LLMs also can’t answer questions about the code they didn’t write or help steer the review process, so that’s a dead giveaway too.