this post was submitted on 24 Jul 2024

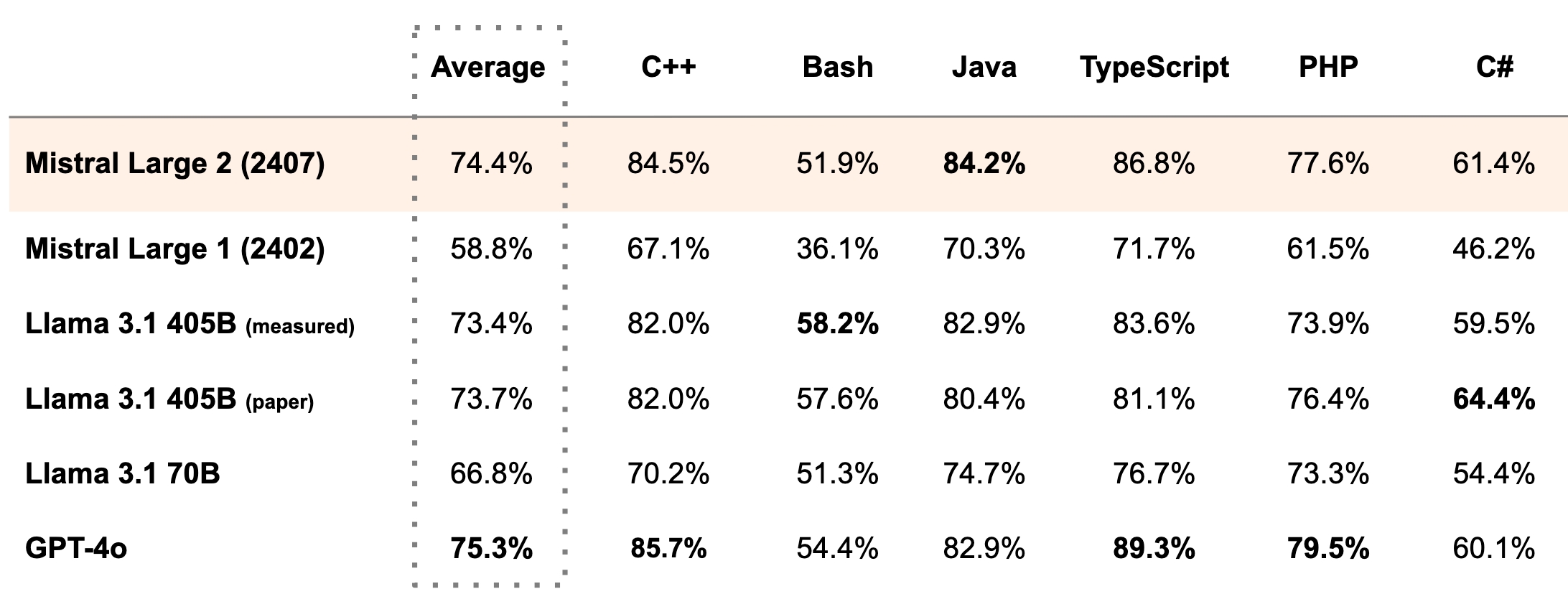

From what I've seen, it's definitely worth quantizing. I've used Llama 3 8B (fp16) and Llama 3 70B (q2_XS). The 70B version was way better, even at that quantization level, and it fits perfectly in 24 GB of VRAM. There's also this comparison showing the quantization options and their benchmark scores:

Source

To run this particular model, though, you would need about 45 GB of RAM just for the q2_K quant, according to Ollama. I think I could run it with my GPU and offload the rest of the layers to the CPU, but the performance wouldn't be that great (e.g. less than 1 t/s).
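
For anyone who wants to sanity-check numbers like these, here is a rough back-of-the-envelope sketch. It assumes the weight size is roughly parameters × bits per weight / 8 and that all layers are the same size. Both function names, the 2 GB headroom, the ~2.3 bits per weight figure for the 2-bit quant, and the 80-layer count are my own illustrative assumptions, not values from Ollama or llama.cpp. It also ignores the KV cache and runtime overhead, so real usage will be higher.

```python
def weight_size_gb(n_params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (10^9 bytes)."""
    return n_params_billion * bits_per_weight / 8


def gpu_layer_split(model_gb: float, vram_gb: float, n_layers: int,
                    reserve_gb: float = 2.0) -> int:
    """Estimate how many equally sized layers fit in VRAM.

    reserve_gb is an assumed headroom for context and buffers.
    """
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, int(usable_gb // per_layer_gb))


if __name__ == "__main__":
    # 8B at fp16 (16 bits per weight).
    print(f"8B fp16: ~{weight_size_gb(8, 16):.1f} GB")
    # 70B at an assumed ~2.3 bits per weight for a 2-bit quant.
    print(f"70B 2-bit: ~{weight_size_gb(70, 2.3):.1f} GB")
    # A 45 GB quant on a 24 GB card, with a hypothetical 80 layers.
    on_gpu = gpu_layer_split(45, 24, 80)
    print(f"layers on GPU: {on_gpu} of 80, rest on CPU")
```

The split is what you would pass as the number of GPU layers in whatever runner you use. The layers left on the CPU are the ones that drag the speed down.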