Today I am very excited to share AutoGen with you, a new framework for enabling next-generation LLM applications.

The framework, described on the Microsoft Research Blog, offers a way to deploy agentic LLMs across your workflows easily and efficiently.

AutoGen

It requires a lot of effort and expertise to design, implement, and optimize a workflow that can leverage the full potential of large language models (LLMs). Automating these workflows has tremendous value. As developers begin to create increasingly complex LLM-based applications, workflows will inevitably grow more intricate. The potential design space for such workflows could be vast and complex, thereby heightening the challenge of orchestrating an optimal workflow with robust performance.

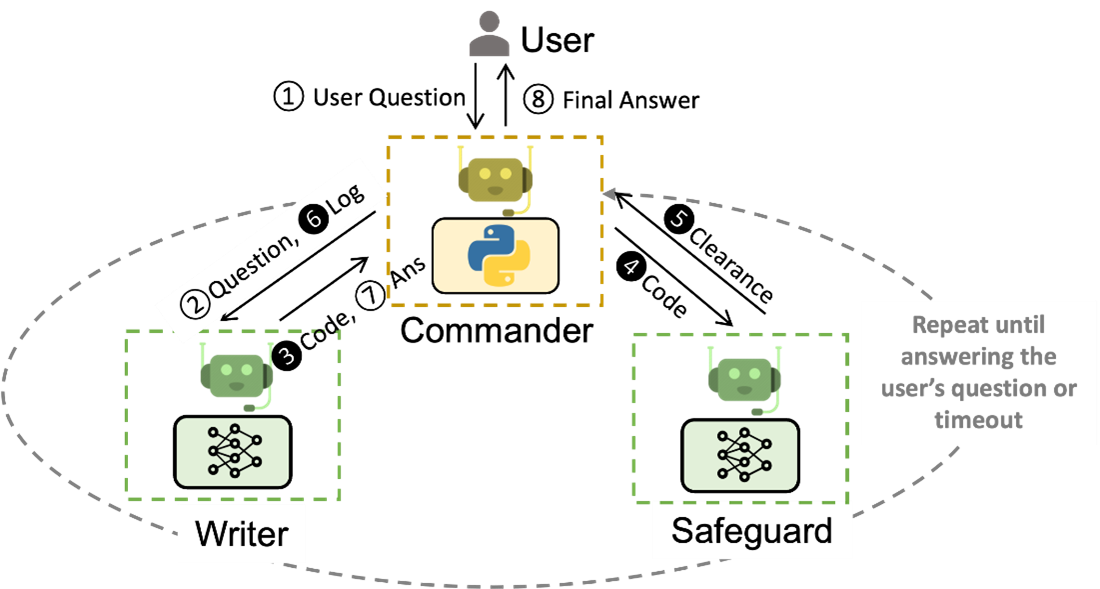

AutoGen is a framework for simplifying the orchestration, optimization, and automation of LLM workflows. It offers customizable and conversable agents that leverage the strongest capabilities of the most advanced LLMs, like GPT-4, while addressing their limitations by integrating with humans and tools and having conversations between multiple agents via automated chat.

With AutoGen, building a complex multi-agent conversation system boils down to:

- Defining a set of agents with specialized capabilities and roles.

- Defining the interaction behavior between agents, i.e., what to reply when an agent receives messages from another agent.

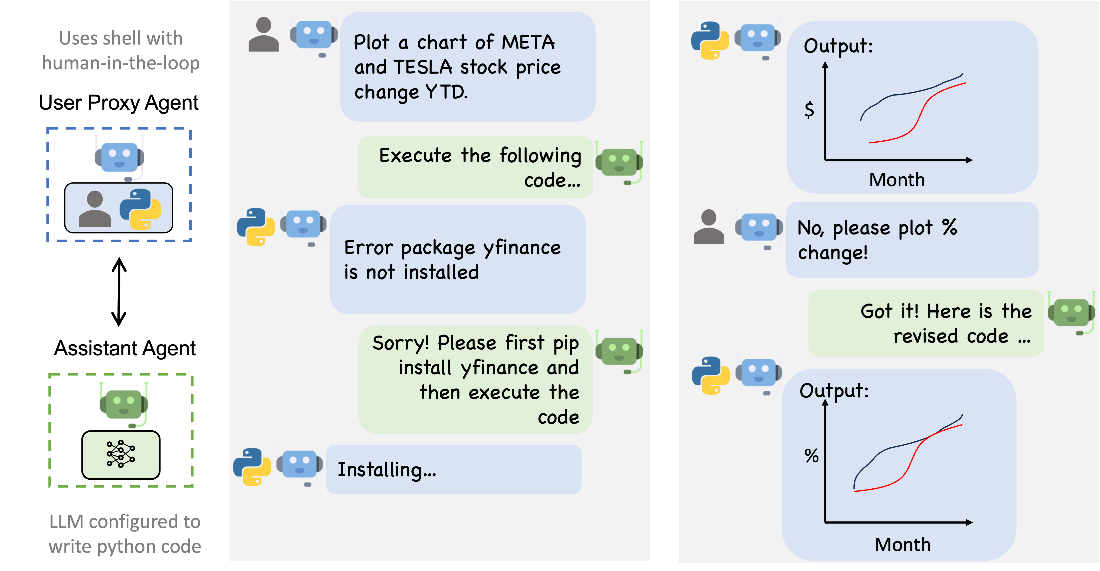

Both steps are intuitive and modular, making these agents reusable and composable. For example, to build a system for code-based question answering, one can design the agents and their interactions as in Figure 2. Such a system is shown to reduce the number of manual interactions needed by 3x to 10x in applications like supply-chain optimization. Using AutoGen leads to more than a 4x reduction in coding effort.
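To make the two steps concrete, here is a minimal plain-Python sketch of the idea. It does not use the AutoGen API: the `Agent` class, `run_chat`, and both reply functions are hypothetical names made up for illustration, and the "coder" is a hard-coded stand-in for an LLM. It only shows how defining agents plus their reply behavior is enough to get an automated back-and-forth, including one round of troubleshooting.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple

# Hypothetical illustration only; none of these names come from AutoGen.
ReplyFn = Callable[[str], Optional[str]]  # returning None ends the chat


@dataclass
class Agent:
    """Step 1: an agent is a name plus a specialized reply behavior."""
    name: str
    reply_fn: ReplyFn
    inbox: List[str] = field(default_factory=list)

    def receive(self, message: str) -> Optional[str]:
        self.inbox.append(message)
        return self.reply_fn(message)


def coder_reply(message: str) -> Optional[str]:
    """Stand-in for an LLM that writes code and fixes it on error."""
    if message.startswith("RESULT"):
        return None                # task solved, stop the conversation
    if message.startswith("ERROR"):
        return "sum([3, 4])"       # corrected attempt
    return "sum(3, 4)"             # first attempt, deliberately buggy


def executor_reply(message: str) -> Optional[str]:
    """Evaluates the proposed expression and reports the outcome."""
    try:
        value = eval(message, {"__builtins__": {}}, {"sum": sum})
        return f"RESULT {value}"
    except Exception as exc:
        return f"ERROR {type(exc).__name__}"


def run_chat(sender: Agent, recipient: Agent, message: str,
             max_turns: int = 6) -> List[Tuple[str, str]]:
    """Step 2: the interaction rule. Agents alternate until one stops."""
    transcript = [(sender.name, message)]
    for _ in range(max_turns):
        reply = recipient.receive(message)
        if reply is None:
            break
        transcript.append((recipient.name, reply))
        sender, recipient, message = recipient, sender, reply
    return transcript


coder = Agent("coder", coder_reply)
executor = Agent("executor", executor_reply)
transcript = run_chat(executor, coder, "Add 3 and 4.")
for speaker, text in transcript:
    print(f"{speaker}: {text}")
```

In this toy run the executor rejects the first attempt, the coder revises it, and the chat ends once a result comes back. In a real system the coder's reply function would call an LLM, and the executor would run code in a sandbox rather than through `eval`.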

The agent conversation-centric design has numerous benefits, including that it:

- Naturally handles ambiguity, feedback, progress, and collaboration.

- Enables effective coding-related tasks, like tool use with back-and-forth troubleshooting.

- Allows users to seamlessly opt in or opt out via an agent in the chat.

- Achieves a collective goal with the cooperation of multiple specialists.
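The opt-in/opt-out point can be pictured as an agent that stands in for the human. The sketch below is plain Python with hypothetical names (`make_human_proxy`, `ask_human`, `auto_reply`); it is not how AutoGen implements this, only an assumption-laden illustration. The human can type a reply, press Enter to let the proxy answer automatically, or type `exit` to end the chat.

```python
from typing import Callable, Optional

# Hypothetical illustration only; not the AutoGen API.
def make_human_proxy(ask_human: Callable[[str], str],
                     auto_reply: str) -> Callable[[str], Optional[str]]:
    """Build a reply function that lets a human step in or stay out."""
    def reply(message: str) -> Optional[str]:
        typed = ask_human(f"Reply to {message!r} (Enter to skip, 'exit' to stop): ")
        if typed.strip().lower() == "exit":
            return None                      # human ends the conversation
        return typed.strip() or auto_reply   # empty input: opt out for this turn
    return reply


# Scripted input stands in for a person at the keyboard;
# pass the built-in `input` instead for interactive use.
scripted = iter(["", "use metric units", "exit"])
proxy = make_human_proxy(lambda prompt: next(scripted),
                         auto_reply="Looks good, continue.")
replies = [proxy("draft 1"), proxy("draft 2"), proxy("draft 3")]
print(replies)
```

Because the human sits behind the same reply interface as any other agent, the rest of the conversation does not need to know whether a person or an automatic rule produced a given message.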

Getting Started

AutoGen (in preview) is freely available as a Python package. To install it, run:

pip install pyautogen

You can quickly enable a powerful experience with just a few lines of code:

import autogen

assistant = autogen.AssistantAgent("assistant")

user_proxy = autogen.UserProxyAgent("user_proxy")

user_proxy.initiate_chat(assistant, message="Show me the YTD gain of 10 largest technology companies as of today.")

# This triggers automated chat to solve the task

See the examples for a wide variety of tasks: https://microsoft.github.io/autogen/docs/Examples/AutoGen-AgentChat

Learn More

I feel like I've been mentioning this a lot lately, but agentic LLMs and emergent AI tooling frameworks like these are what will return the most value to us. If you're looking to expand your horizons beyond just chatting with LLMs, integrating agentic tools is an interesting topic to explore. There is much to be built in this exciting AI space!

Someone explain to me why there are so many frameworks focused on LLM-based "agents" (LangChain, {{guidance}}, and now whatever this is) and how these are practically useful, when I have yet to find a model that can even successfully perform a simple database query to answer an easy question (searching for one or two items by keyword, retrieving their quantity, and adding the quantities together if applicable) regardless of the model, prompt template, and function API used.