this post was submitted on 27 Nov 2024

212 points (94.5% liked)

Firefox

A place to discuss the news and latest developments on the open-source browser Firefox

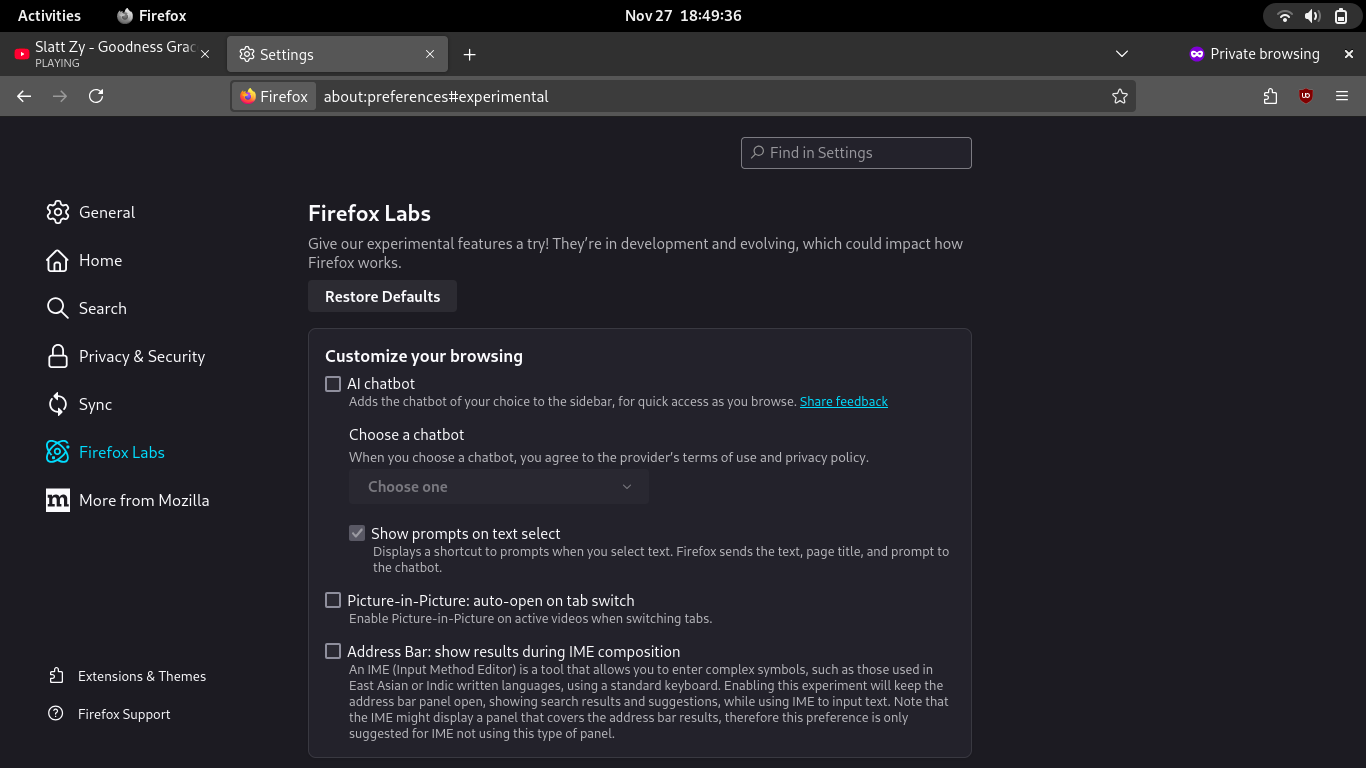

It gives you many options for what to use; for example, you can use Llama, which runs offline. It needs to be enabled first, though: about:config > browser.ml.chat.hideLocalhost.
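For anyone who would rather not click through about:config, here is a rough Python sketch that sets the same pref in the text of a profile's `user.js` file, which Firefox reads at startup. The helper name `set_bool_pref` is made up for illustration, and the value `false` is my assumption about what "enabled" means for this pref (un-hiding the localhost option), so double-check it before relying on it.

```python
import re

# Pref name taken from the comment above.
PREF = "browser.ml.chat.hideLocalhost"


def set_bool_pref(user_js: str, name: str, value: bool) -> str:
    """Return user.js text with `name` set to `value`.

    Hypothetical helper: replaces an existing user_pref line for `name`,
    or appends a new one if none is present.
    """
    line = f'user_pref("{name}", {"true" if value else "false"});'
    pattern = re.compile(
        r'^[ \t]*user_pref\(\s*"' + re.escape(name) + r'"\s*,.*\);[ \t]*$',
        re.MULTILINE,
    )
    if pattern.search(user_js):
        # Use a function replacement so `line` is inserted literally.
        return pattern.sub(lambda _match: line, user_js)
    # Make sure the new line starts on its own line.
    separator = "" if user_js == "" or user_js.endswith("\n") else "\n"
    return user_js + separator + line + "\n"


if __name__ == "__main__":
    # Assumption: False (do not hide localhost) is the value that enables it.
    print(set_bool_pref("", PREF, False), end="")
```

The function only transforms text; saving the result as `user.js` in your Firefox profile folder and restarting the browser is left to you.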

And thus it is unavailable to anyone who isn't a power user: they will never see a comment like this, and about:config would fill them with dread.

Lol, that is certainly true, and you would also need to set it up manually, which even power users might not be able to do. Thankfully, there is an easy-to-follow guide here: https://ai-guide.future.mozilla.org/content/running-llms-locally/.
