We still restarting 3 Mile Island? I hope so but not for AI.

For a lot of computing workloads, including AI inference (and training), the user won't notice the difference if it's being done in a different country.

With the US's '100% tariffs on Taiwanese chips' to be taken 'seriously, but not literally', at some point it makes sense to build additional 'AI' capacity in Canada instead.

I tried to find additional info on the report's 're-allocating a considerable portion of [its] projected international spend to the US', but I am unable to find the original report, to see if there were additional pages.

Whoops

Oh thank fuck. Finally some steps away from AI. It was starting to feel like everything was AI all the time.

For the last year or two AI has been the buzzword of the day. Anyone not investing in AI is considered dinosauric. Just like cloud was 5 to 10 years ago.

Only the cycle has happened a little more quickly this time. AI was supposed to produce immediate revolution, and we are seeing the immediate results are... Just okay.

Google spends billions building an AI that takes 10x more power per query than a standard search, only so it can tell people to superglue their pizza together and jump off the Golden Gate Bridge when they are depressed.

Copilot 365 costs about as much as an E3 license, so turning it on basically doubles your monthly spend with Microsoft. I don't see it doubling anybody's productivity.

AI is like cloud. There are some places where it makes sense, where it can be helpful, where it can save time or help do difficult jobs. That is not everywhere doing everything for everybody, and I think perhaps some of the world is starting to realize that.

I think perhaps some of the world is starting to realize that.

Finally!

If only they'd programmed the AI to tell them this sooner.

I think local compute will kill these huge data centers for AI. It’s amazing what you can do with free tools like Ollama or RAG agents like n8n, even on a business laptop with only 16GB of RAM. If you’ve got a 4090 at home in your gaming PC and some big RAM sticks - well, you’d be surprised at what some models can do (and how quickly they can respond).

You all know how the internet works - in a short time someone’s going to put together a free tool that’s as easy as “click this button to install” and it’ll do 80% of what ChatGPT can do, i.e. probably enough for the average user - for free.

So how are they going to recoup all these billions spent on data centers if people’s personal computers can mostly do the same thing? How do they monetize your information and sell you ads if it’s all done locally? Go download one and ask it questions - sure, it’s not perfect, but a locally hosted model is surprisingly good.

I think the people spending these billions are starting to realize that… Meanwhile, I think this keeps video card prices high, unfortunately…

Unfortunately I think most businesses will still prefer that their AI solution is hosted by a company like OpenAI rather than maintaining their own. There's still going to be a need for these large data centers, but I do hope most people realize that hosting your own LLM isn't that difficult, and it doesn't cost you your privacy.

The cost is insane though. I think there’s a disconnect between what they want and what they can afford. I think it’s like a 10x adder per user license to go from regular Office 365 to a Copilot-enabled account. I know my company wants it hosted in the cloud - but we aren’t going to pay the going rates. It’s insane.

Meh we’ll see. But I do wonder what happens when they get packaged up easier as a program.

Anyone running a newer MacBook Pro can install Ollama and run it with just a few commands:

brew install ollama

ollama run deepseek-r1:14b

Then you can use it at the terminal, but it also has API access, so with a couple more commands you can put a web front end on it, or with a bit more effort you can add it to a new or existing app/service/system.
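For anyone curious what "API access" looks like in practice, here is a rough sketch in Python using only the standard library. It assumes Ollama is running locally on its default port (11434) and that the model from the commands above has already been pulled. The helper names `build_request` and `ask` are made up for illustration and are not part of Ollama.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt, model="deepseek-r1:14b", url=OLLAMA_URL):
    """Build (but do not send) a POST request for Ollama's generate endpoint."""
    # "stream": False asks for one JSON object instead of a stream of chunks.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, model="deepseek-r1:14b"):
    """Send the prompt to the local server and return the generated text."""
    request = build_request(prompt, model)
    # Generous timeout: a 14b model on a laptop can take a while to answer.
    with urllib.request.urlopen(request, timeout=300) as response:
        body = json.loads(response.read())
    return body["response"]
```

With the server up, `print(ask("Why is the sky blue?"))` should print the model's answer. Wrapping `ask` in a small web handler is roughly what the "web front end" part amounts to.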

Interesting article.

Data center buildouts take about three to six years to complete, and the largest hyperscale facilities can easily cost several billion dollars, meaning that these moves are extremely forward-looking. You don’t build a data center for the demand you have now, but for the demand you expect further down the line. This suggests that Microsoft believes its current infrastructure (and its likely scaled-back plans for expansion) will be sufficient for a movement that CEO Satya Nadella called a "golden age for systems" less than a year ago.

To explain here, TD Cowen is effectively saying that Microsoft is responding to a "major demand signal" and said "major demand signal" is saying "you do not need more data centers." Said demand signal that Microsoft was responding to, in TD Cowen's words, is its "appetite for capacity" to provide servers to OpenAI, and it seems that said appetite is waning, and Microsoft no longer wants to build out data centers for OpenAI.

I believe the reason Microsoft is cutting back is that it does not have the appetite to provide further data center expansion for OpenAI, and it’s having doubts about the future of generative AI as a whole. If Microsoft believed there was a massive opportunity in supporting OpenAI's further growth, or that it had "massive demand" for generative AI services, there would be no reason to cancel capacity, let alone cancel such a significant amount.

Not to put too fine a point on it, but the majority of Ed Zitron's articles are deeply researched and very interesting.

I read somewhere someone supposed it had to do with SoftBank building out data centers to host this nonsense.

Which, given the history of SoftBank's investing, is utterly hilarious and appropriate.

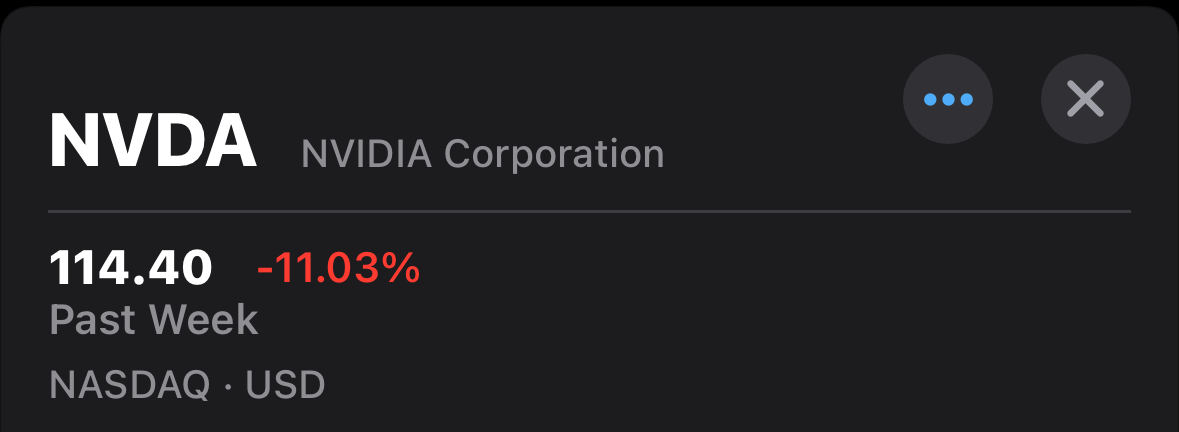

Isn’t it a volatile time to invest in something that could have very major price swings?