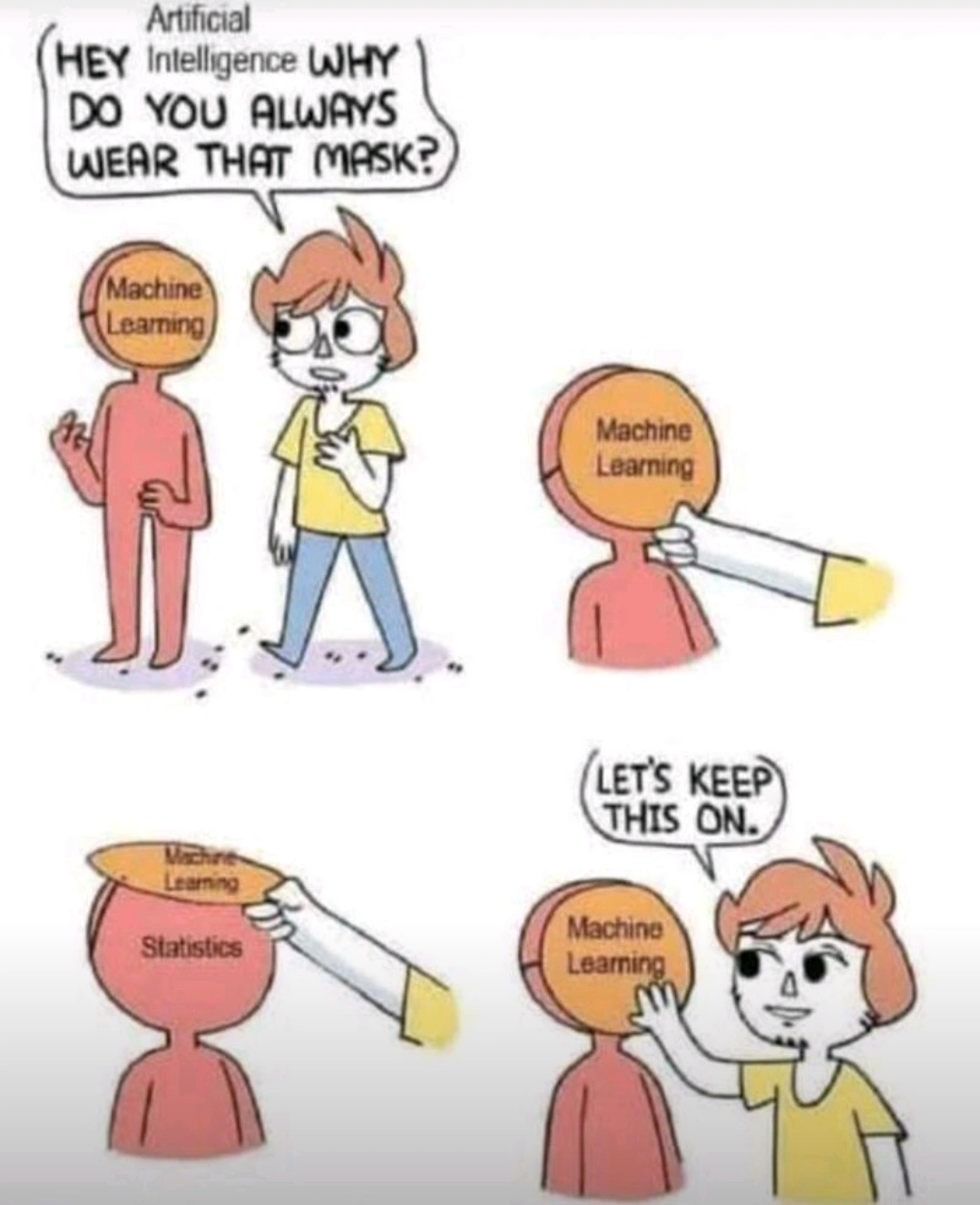

Well, lots of people blinded by hype here. Obviously it is not simply a statistical machine, but imo it is something worse: some approximation machinery that happens to work but gobbles up energy. Something only possible because we are not charging companies enough for electricity, smh.

We're in the "computers take up entire rooms in a university to do basic calculations" stage of modern AI development. It will improve, but only if we let it develop.

Moore's law died a long time ago, and AI models aren't getting any more power efficient from what I can tell.

Then you haven't been paying attention. There have been huge strides in the field of small open language models, which can do inference with low enough power consumption to run locally on a phone.

My biggest issue is that a lot of physical models for natural phenomena are being solved using deep learning, and I am not sure how that helps deepen our understanding of the natural world. I am for DL solutions, but maybe they would benefit from being explainable in some form. For example, it's kinda old, but I really like all the work around Grad-CAM and its successors: https://arxiv.org/abs/1610.02391

I wouldn't say it is statistics. Statistics is much more precise in its calculation of uncertainties. AI depends more on calculus, or automatic differentiation, which is also cool but not statistics.
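For readers unfamiliar with the term, here is a minimal sketch of forward-mode automatic differentiation using dual numbers. The `Dual` class, the `derivative` helper and the example function `f` are made up for illustration and are not taken from any library; real frameworks mostly use reverse mode at much larger scale.

```python
from dataclasses import dataclass


@dataclass
class Dual:
    """A number carrying its value and its derivative (hypothetical helper)."""
    value: float
    deriv: float

    def __add__(self, other):
        other = _lift(other)
        # Sum rule: (u + v)' = u' + v'
        return Dual(self.value + other.value, self.deriv + other.deriv)

    __radd__ = __add__

    def __mul__(self, other):
        other = _lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.value * other.value,
                    self.deriv * other.value + self.value * other.deriv)

    __rmul__ = __mul__


def _lift(x):
    """Treat plain numbers as constants, i.e. with derivative 0."""
    return x if isinstance(x, Dual) else Dual(float(x), 0.0)


def derivative(f, x):
    """Evaluate f at x with a seed derivative of 1 and read off df/dx."""
    return f(Dual(x, 1.0)).deriv


def f(x):
    # Example function: f(x) = 3x^2 + 2x + 1, so f'(x) = 6x + 2
    return 3 * x * x + 2 * x + 1


print(derivative(f, 2.0))  # 14.0
```

The derivative is exact up to floating point, not a finite-difference approximation: each arithmetic operation applies its own calculus rule as it runs.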

Just because you don't know what the uncertainties are doesn't mean they're not there.

Most optimization problems can trivially be turned into a statistics problem.
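One standard instance of that claim, as a rough sketch: minimizing squared error for a line fit is the same problem as maximizing a Gaussian likelihood, assuming independent noise with a fixed standard deviation. The data points and function names below are invented for illustration.

```python
import math


def fit_least_squares(xs, ys):
    """Closed-form slope and intercept minimizing the sum of squared residuals."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def sum_squared_error(xs, ys, slope, intercept):
    """The optimization view: a plain loss function."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))


def gaussian_nll(xs, ys, slope, intercept, sigma=1.0):
    """The statistics view: negative log-likelihood of ys under
    y = slope * x + intercept + noise, with noise ~ N(0, sigma^2) (assumed)."""
    n = len(xs)
    sse = sum_squared_error(xs, ys, slope, intercept)
    return 0.5 * n * math.log(2 * math.pi * sigma ** 2) + sse / (2 * sigma ** 2)


# Made-up data, roughly following y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 6.8, 9.1]

slope, intercept = fit_least_squares(xs, ys)
best_nll = gaussian_nll(xs, ys, slope, intercept)
# Moving away from the least-squares fit also lowers the likelihood.
worse_nll = gaussian_nll(xs, ys, slope + 0.1, intercept)
print(slope, intercept, best_nll < worse_nll)
```

With `sigma` fixed, the negative log-likelihood is just the squared error scaled and shifted by constants, so both views pick the same line. The statistical framing is what adds the uncertainty estimates discussed above.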